How to handle dependant Entities through SQL and Databricks Data Product¶

Information

Take into account that this tutorial can be used as an illustrative guide for Sidra's versions. Due to its nature subject of continuous development, there may be minor changes to the displayed code. For more information, you can contact our Sidra's Support Team.

This tutorial covers a series of step-by-step scenarios about using dependant Entities and the usage of the SQL and Databricks Sidra Data Product.

Please refer to this documentation page about the Sync Mode for more details about the specific aspects of Data Product architecture or related ADF orchestration pipeline.

This guide covers some possible end to end scenarios of data intake in Sidra that contain related Entities, and how the Staging tables will look like after the orchestration through the specific client ADF pipeline, used to orchestrate the movement of data from the Data Lake to the Data Product staging tables.

1. Configure Sidra Service¶

Configure the data source in Sidra Service from origin tables¶

As origin tables, we will use the Microsoft-provided Adventure Works as sample database, where we will add some modifications to showcase the scenarios that we need to cover.

Below, a list of the resources to use for this tutorial is shown.

Provider, Entities and Attributes information¶

The data related to the Provider and Entities to be created in Sidra Service for the source are the following:

- Provider/ProviderName:

Support - Entities/TableName:

support_SalesLT_Product,support_SalesLT_SalesOrderHeader,support_SalesLT_SalesOrderDetail - Attributes (simplified):

ProductID,Name,LoadDate,IdSourceItem

Tables¶

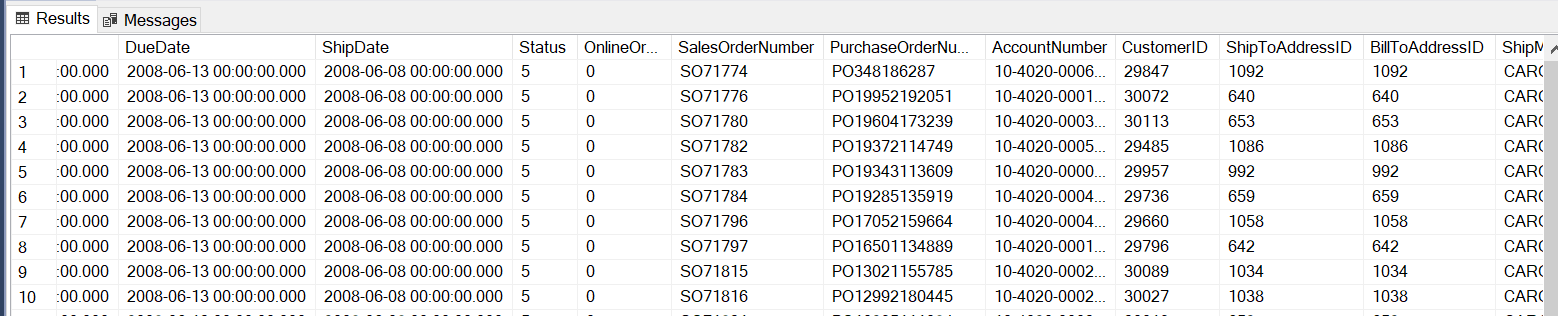

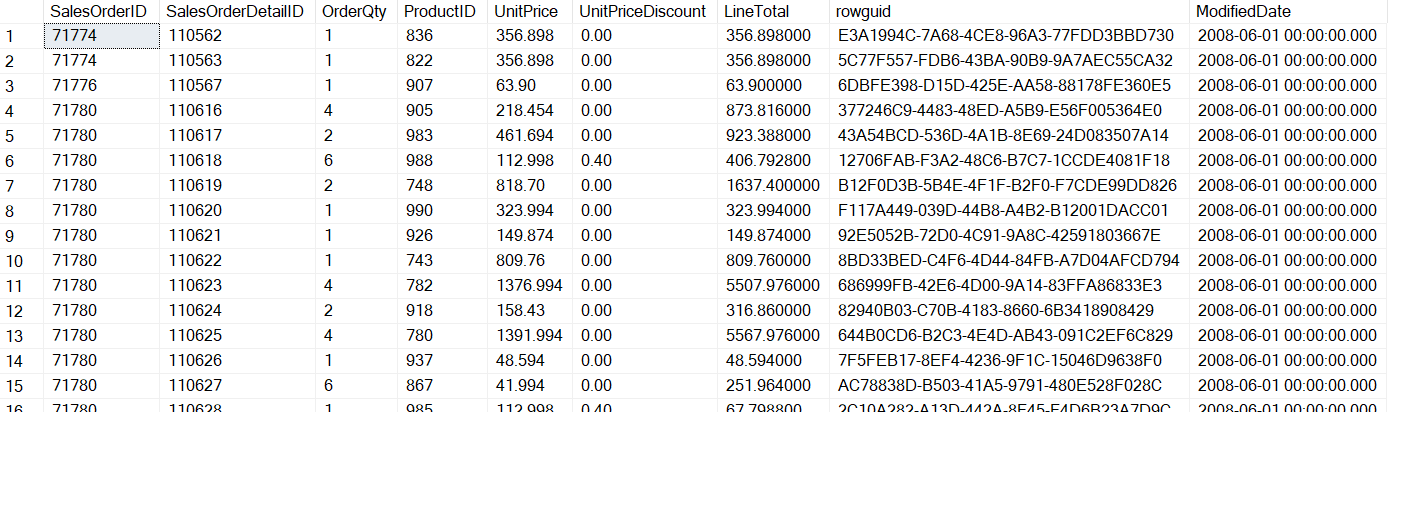

In the original source we will focus on three tables:

-

[SalesLT].[SalesOrderHeader]This table represents a sales order header table, with one row per order. Each field will represent a property of the sales order. This table will be loaded with incremental load with Change Tracking activated. Below is the instruction to activate change tracking in this table in the origin table:

-

[SalesLT].[SalesOrderDetail]This table represents a sales order detail table, with several rows per order. Each field represents a property of a line item in each sales order. This table will be loaded with incremental load with Change tracking activated.

Below is the instruction to activate change tracking in this table in the origin table:

-

[SalesLT].[Product]This table represents a product that is contained in item list in any sales order. This table will be loaded with full load.

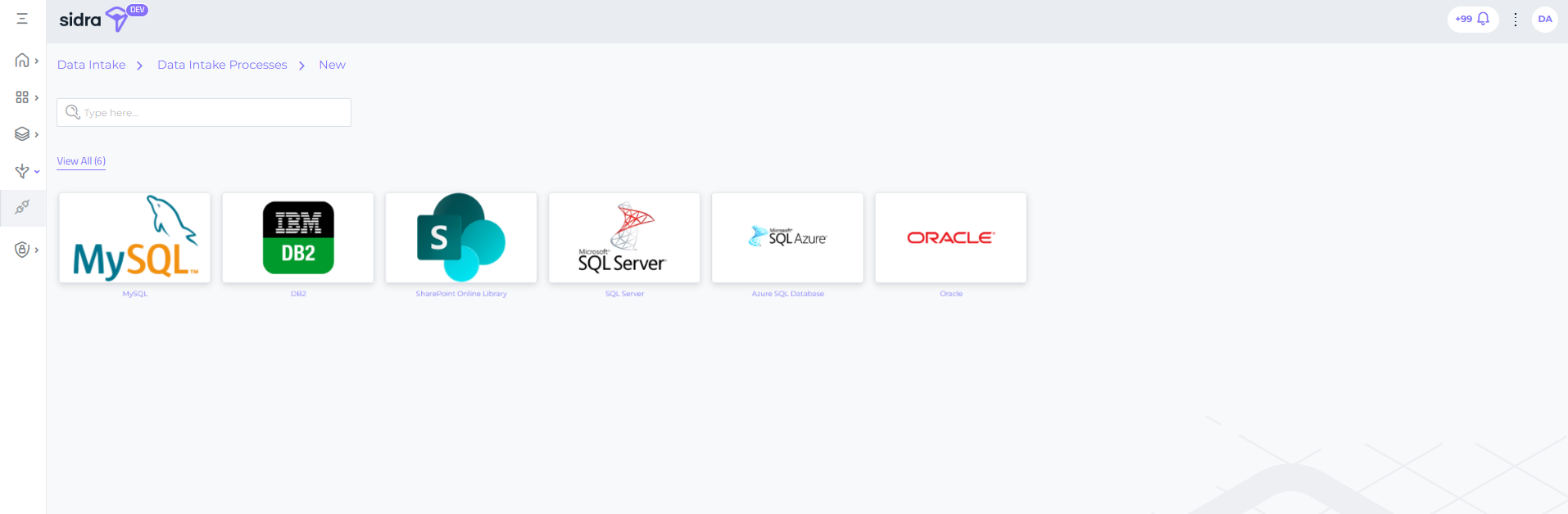

Data Intake Processes creation¶

Once these settings have been activated in the source, we just need to create the Data Intake Process in Sidra Service, following the Connector Plugins wizard. For more details on how to configure a Data Intake Process through the UI, please check this page.

For this tutorial, we will choose Azure SQL Database connector from the gallery, and use the connection string for Adventure Works database. Also, for the purpose of this tutorial, the new Provider created will be called Support.

Data Factory pipelines settings¶

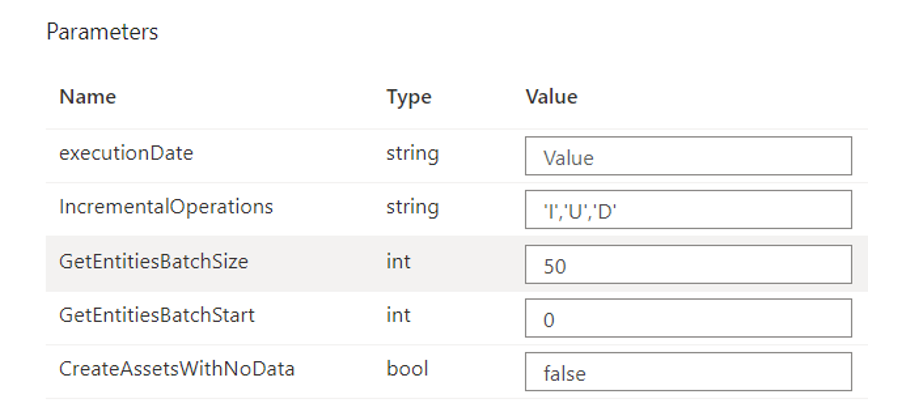

There is a parameter in the Sidra Service Data Intake pipeline (data extraction pipeline) that instructs the pipeline to create empty Assets (also known as dummy Assets) with every incremental load, even if there are no actual real data increments being loaded from the source system. This is needed so the Sync webjob in the Data Product can see these Assets and not stop the execution of the Data Product pipeline. The Data Product pipeline will take these Assets and update the Data Product staging tables with these new Assets. If this setting is set to false, this means that the Assets would not be generated, therefore the Sync job will not execute the Data Product pipeline, so there would not be any update in the Data Product staging table.

For checking or changing this setting, please go to the data extraction pipeline parameters in the DB and include the following line:

After adding or updating this setting, please re-deploy the Sidra Service Data Intake pipeline using the API:

https://[yourWebAPI].azurewebsites.net/api/datafactory/pipelines/[IdPipeline]/deploy?api-version=1.0

The parameters field in the Data Intake Sidra Service pipeline should be similar to this:

{

"Pipeline.ItemId": "XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX",

"ProviderItemId": "XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX",

"folder": "Support",

"Pipeline.Name.NoSpaces": "ExtractData_Support",

"sqlDatabaseLinkedServiceName": "SupportAzureSql",

"extractDatasetSourceType": "AzureSqlSource",

"extractDatasetType": "AzureSqlTable",

"createAssetsWithNoData": "true",

"ConnectorId": "da25f746-02dd-44d4-9e65-b11cb1bf2f99",

"ConnectorVersion": "3.1.2",

"ConnectorVersionId": "19766eda-3dbc-4c74-97a1-01d31e239a31"

}

Key considerations

- What is the value of the parameters in the Data Intake (Sidra Service) pipeline CreateAssetsWithNoData (create Assets if there is no data).

- If this value is False, it means that if there are no new increments for an Entity, the data extraction pipeline in Sidra Service will not generate any Asset in Sidra Service, therefore the Data Product will not see anything new.

- If this value is True, it means that even if there are no new increments for an Entity, the data extraction pipeline in Sidra Service will DO generate an Asset (empty Asset or dummy Asset) in Sidra Service. The Data Product Sync will therefore synchronize this as an existing Asset.

- What is the behavior of the IsMandatory field in the EntityPipeline table in the Data Product database.

- The pipeline will not run until there are Assets for all Entities marked as mandatory. Even if there are successful loads in the Data Lake for some of these Entities, the Sync will not create Assets in the Client DB and execute the pipeline.

- What is the Sync behavior configured in the Pipeline table in the Data Product.

- In the case that the behavior is LoadAllNewDates, in case there are some days where there are not all mandatory Entities for some days, the day when all the mandatory Entities are in the Data Lake, there will be missed Assets from these previous days.

- In the case that the behavior is LoadPendingUpToValidDate, in case there are some days where there are not all mandatory Entities for some days, the day when all the mandatory Entities are there, there will be a catch-up of all successfully loaded Entities in the Data Lake from previous days.

2. Configure Data Product¶

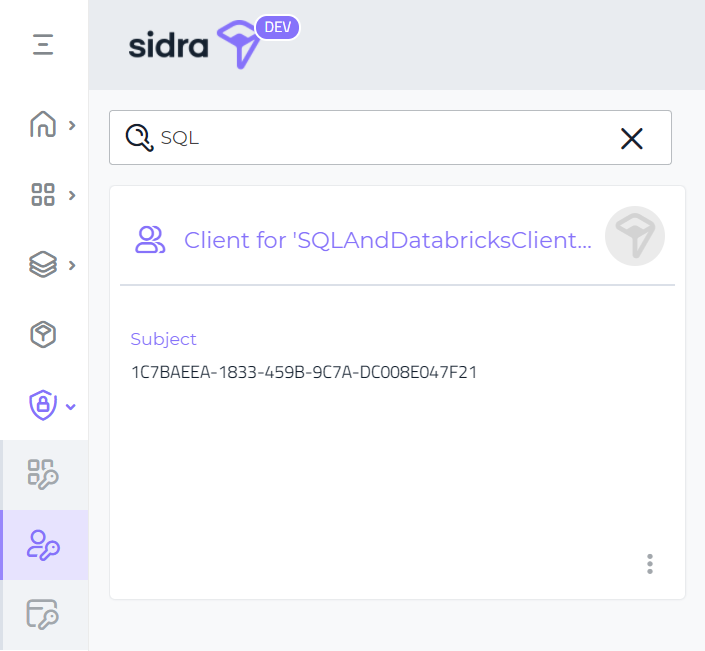

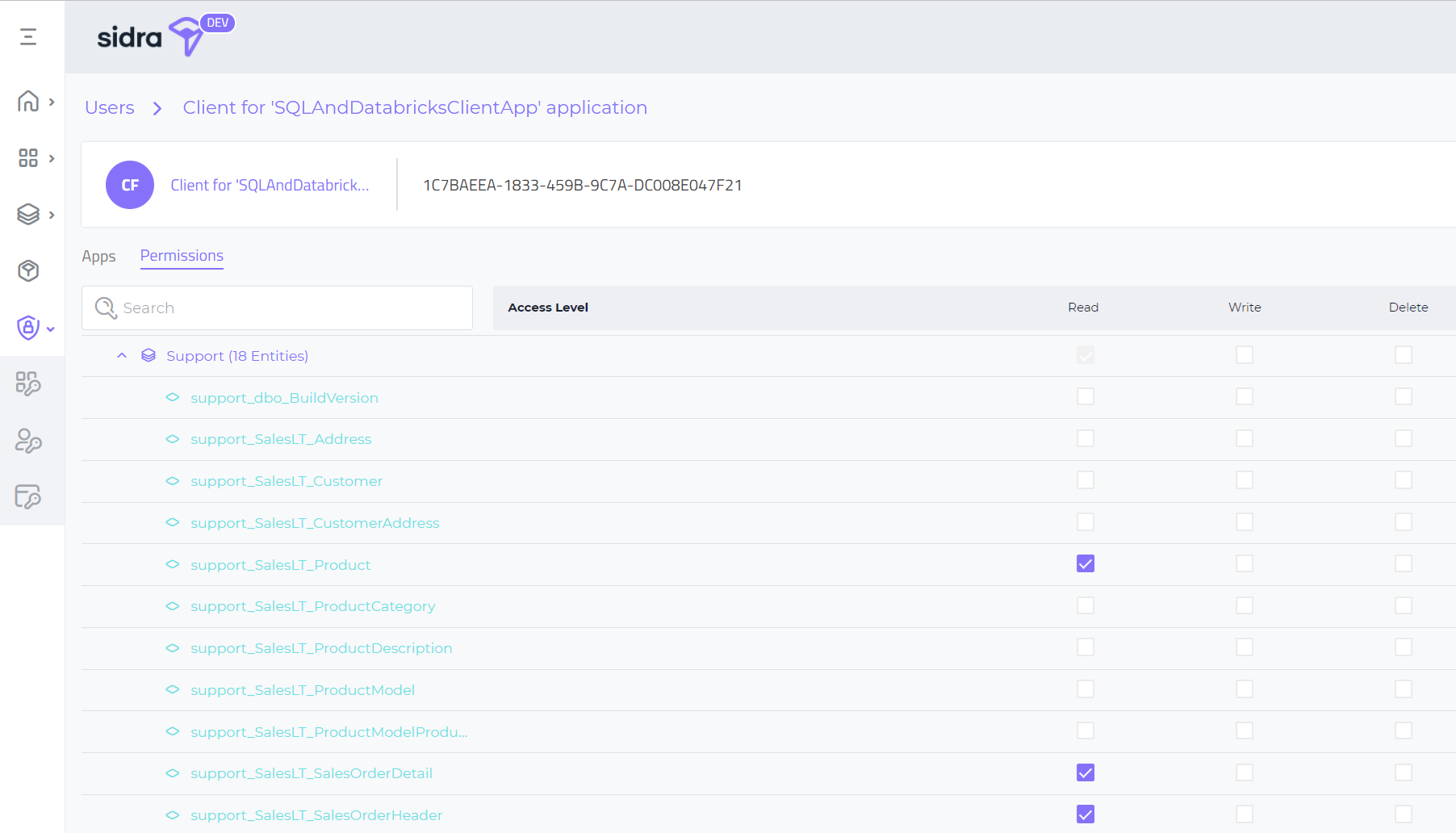

Assign permissions to the Data Product¶

For the purpose of this tutorial, the SQL and Databricks Data Product we will use will be called SQLAndDatabricksClientApp.

We need to give this Data Product permissions on the following Entities: support_SalesLT_Product, support_SalesLT_Product and support_SalesLT_Product.

For doing this, please go to the Authorizations > Apps > SQLAndDatabricksClientApp:

Then, click on the Data Product and go to the Permissions tab. Look for the Support Provider and check the above Entities:

Configure the pipeline in the Data Product¶

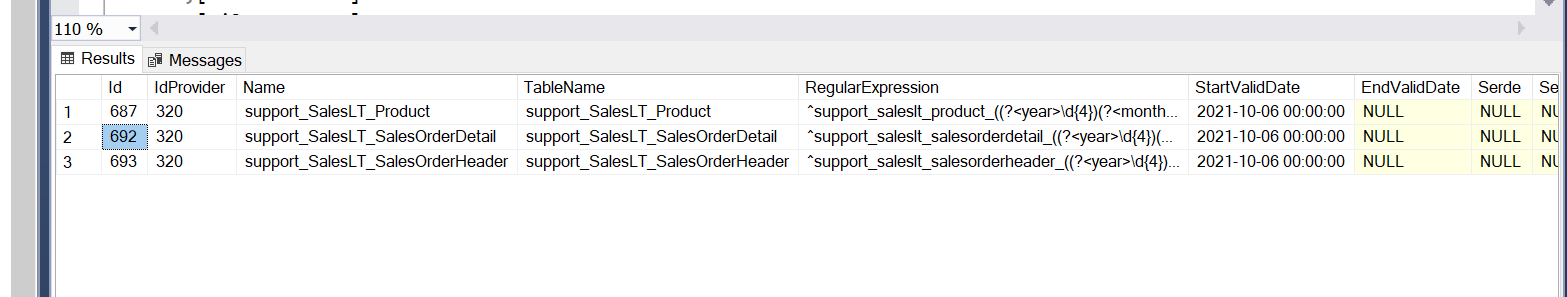

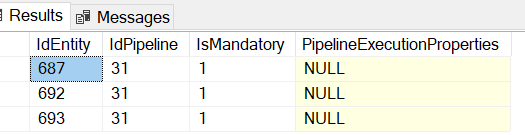

These will be the Entities that the Sync job in the Data Product will synchronize in the Data Product metadata DB:

After giving permissions to this Data Product on these Entities, we need to configure the Data Product pipeline. The Pipeline Sync behaviour will be LoadPendingUpToValidDate. Please refer to this page for more background on this topic.

--- CONFIGURATION

DECLARE @IdProvider INT =

(

SELECT [Id]

FROM [Sidra].[Provider]

WHERE [ProviderName] = 'Support'

)

DECLARE @ItemIdPipelineDSUExtraction UNIQUEIDENTIFIER = newid()

DECLARE @IdPipelineTemplateDSUExtraction INT =

(

SELECT [Id]

FROM [Sidra].[PipelineTemplate]

WHERE [ItemId] = '<PIPELINE TEMPLATE ID>'

)

DECLARE @IdDataFactory INT = 1

DECLARE @IdSyncBehaviour INT = 1

--0 Ignored

--1 LoadAllNewDates

--2 LoadFirstNewDate

--3 LoadLastNewDate

--4 LoadPendingUpToValidDate

--- ROLLBACK

DELETE FROM [Sidra].[EntityPipeline] WHERE [IdPipeline] IN (SELECT [Id] FROM

[Sidra].[Pipeline] WHERE [ItemId] = @ItemIdPipelineDSUExtraction)

DELETE FROM [Sidra].[Pipeline] WHERE [ItemId] = @ItemIdPipelineDSUExtraction

--- PIPELINE CREATION

INSERT INTO [Sidra].[Pipeline]

([ItemId], [Name], [ValidFrom], [ValidUntil],

[IdTemplate], [LastUpdated], [LastDeployed], [IdDataFactory], [IsRemoved],

[IdPipelineSyncBehaviour], [Parameters],

[ExecutionParameters])

VALUES

(@ItemIdPipelineDSUExtraction, 'DSUExtractionToDatabricksScalingDB', '2021-10-24', NULL,

@IdPipelineTemplateDSUExtraction, GETUTCDATE(), NULL, @IdDataFactory, 0,

@IdSyncBehaviour, '{"storedProcedureName":"[staging].[Orchestrator]"}','"scaleUpCapacity":"<CAPACITY E.G. 50>",

"scaleUpTierName":"<TIER NAME E.G. S2>"

}')

DECLARE @IdExtractPipeline INT =

(

SELECT [Id]

FROM [Sidra].[Pipeline]

WHERE [ItemId] = @ItemIdPipelineDSUExtraction

)

Next, we need to configure the Entity-Pipeline association for this Data Product, by inserting a relationship between the Entities and this pipeline.

INSERT INTO [Sidra].[EntityPipeline]

([IdEntity], [IdPipeline], [IsMandatory])

SELECT Id AS [IdEntity], @IdPipelineDSUExtraction, 1

FROM [Sidra].[Entity]

WHERE IdProvider = @IdProvider

You can see more information about the above scripts in the documentation for creating a new Data Product.

We can see how in the Entity-Pipeline relationship table, we have defined the above three Entities as mandatory:

SELECT TOP (1000) [IdEntity],

[IdPipeline],

[IsMandatory],

[PipelineExecutionProperties]

FROM [Sidra].[EntityPipeline]

Configure StagingConfiguration table¶

Next, we need to configure the StagingConfiguration table to configure the new tables or views and the queries to create these tables or views. Databricks will run these queries on the data to create these new tables in the staging schema.

The StagingConfiguration table is needed in order to parametrize these queries in the Databricks notebook.

You can see more info in the SQL and Databricks Sidra Data Product page.

Case 1. Create a staging table using the default configuration mode¶

INSERT INTO [Sidra].[StagingConfiguration]

([IdPipeline], [Query],

[IdEntity], [SchemaName],

[TableName], [TableFieldDefinition])

VALUES

-- [SalesLT].[Product] (By Default)

(@IdPipelineDSUExtraction, NULL,

687, NULL,

NULL, NULL)

-- [SalesLT].[SalesOrderHeader]

, (@IdPipelineDSUExtraction,'SELECT H.SalesOrderId, H.OrderDate, H.SalesOrderNumber,

H.ShipMethod, H.SubTotal, D.SalesOrderDetailId, D.OrderQty, D.UnitPrice, D.LineTotal

FROM (SELECT * FROM support.support_SalesLT_SalesOrderHeader TIMESTAMP AS OF

"<<TIMESTAMP.support.support_SalesLT_SalesOrderHeader>>") H

LEFT JOIN (SELECT * FROM support.support_SalesLT_SalesOrderDetail TIMESTAMP AS OF

"<<TIMESTAMP.support.support_SalesLT_SalesOrderDetail>>") D

ON H.SalesOrderId = D.SalesOrderId WHERE H.IdSourceItem IN (<<ASSETID.support.support_SalesLT_SalesOrderHeader>>)',

NULL, NULL,

'support_SalesLT_SalesOrderHeaderWithDetails', '[SalesOrderID] [int] NULL,[OrderDate] [datetime] NULL,

[SalesOrderNumber] [nvarchar](25) NULL,[ShipMethod] [nvarchar](50) NULL,[SubTotal] [money] NULL,

[SalesOrderDetailID] [int] NULL,[OrderQty] [smallint] NULL,[UnitPrice] [money] NULL,[LineTotal] [numeric](18, 0) NULL')

-- [SalesLT].[SalesOrderDetail]

, (@IdPipelineDSUExtraction, 'SELECT H.SalesOrderId, H.OrderDate, H.SalesOrderNumber,

H.ShipMethod, H.SubTotal, D.SalesOrderDetailId, D.OrderQty, D.UnitPrice, D.LineTotal

FROM (SELECT * FROM support.support_SalesLT_SalesOrderDetail TIMESTAMP AS OF

"<<TIMESTAMP.support.support_SalesLT_SalesOrderDetail>>") D

LEFT JOIN (SELECT * FROM support.support_SalesLT_SalesOrderHeader TIMESTAMP AS OF

"<<TIMESTAMP.support.support_SalesLT_SalesOrderHeader>>") H

ON H.SalesOrderId = D.SalesOrderId WHERE D.IdSourceItem IN (<<ASSETID.support.support_SalesLT_SalesOrderDetail>>)',

NULL, NULL,

'support_SalesLT_SalesOrderDetailWithHeaders', '[SalesOrderID] [int] NULL, [OrderDate] [datetime] NULL,

[SalesOrderNumber] [nvarchar](25) NULL,[ShipMethod] [nvarchar](50) NULL,[SubTotal] [money] NULL,

[SalesOrderDetailID] [int] NULL,[OrderQty] [smallint] NULL,[UnitPrice] [money] NULL,[LineTotal] [numeric](18, 0) NULL')

So, basically, for the purpose of this example, we will create two new views in Staging:

support_SalesLT_SalesOrderHeaderWithDetails, with thesupport_SalesLT_SalesOrderHeaderleft joining thesupport_SalesLT_SalesOrderDetailsupport_SalesLT_SalesOrderDetailWithHeaders, with thesupport_SalesLT_SalesOrderDetailleft joining thesupport_SalesLT_SalesOrderHeader

Case 2. Create a custom staging table using the combination of two Entities¶

In this case, we are not using the default configuration mode for the StagingConfiguration table, we need to populate the following fields: Query, TableName and TableFieldDefinition:

INSERT INTO [Sidra].[StagingConfiguration]

([IdPipeline], [Query],

[IdEntity], [SchemaName],

[TableName], [TableFieldDefinition])

VALUES

, (@IdPipelineDSUExtraction, 'SELECT H.SalesOrderId, H.OrderDate, H.SalesOrderNumber,

H.ShipMethod, H.SubTotal, D.SalesOrderDetailId, D.OrderQty, D.UnitPrice, D.LineTotal

FROM (SELECT * FROM support.support_SalesLT_SalesOrderHeader TIMESTAMP AS OF

"<<TIMESTAMP.support.support_SalesLT_SalesOrderHeader>>") H

LEFT JOIN (SELECT * FROM support.support_SalesLT_SalesOrderDetail TIMESTAMP AS OF

"<<TIMESTAMP.support.support_SalesLT_SalesOrderDetail>>") D

ON H.SalesOrderId = D.SalesOrderId WHERE H.IdSourceItem IN (<<ASSETID.support.support_SalesLT_SalesOrderHeader>>)'

, NULL, NULL,

'support_SalesLT_SalesOrderHeaderWithDetails', '[SalesOrderID] [int] NULL,[OrderDate] [datetime] NULL,

[SalesOrderNumber] [nvarchar](25) NULL,[ShipMethod] [nvarchar](50) NULL,[SubTotal] [money] NULL,

[SalesOrderDetailID] [int] NULL,[OrderQty] [smallint] NULL,[UnitPrice] [money] NULL,[LineTotal] [numeric](18, 0) NULL')

The result will be:

- A staging table will be created, with

Stagingas a schema (default when the valueSchemaNameis not specified). The table name will be specified by the value inTableName, and the columns for the table will be defined by theTableFieldDefinitioncolumn. - The staging table will be populated executing the query defined in

Querycolumn:

SELECT H.SalesOrderId, H.OrderDate, H.SalesOrderNumber, H.ShipMethod,

H.SubTotal, D.SalesOrderDetailId, D.OrderQty, D.UnitPrice, D.LineTotal

FROM (SELECT * FROM support.support_SalesLT_SalesOrderHeader TIMESTAMP AS OF

"<<TIMESTAMP.support.support_SalesLT_SalesOrderHeader>>") H

LEFT JOIN (SELECT * FROM support.support_SalesLT_SalesOrderDetail TIMESTAMP AS OF

"<<TIMESTAMP.support.support_SalesLT_SalesOrderDetail>>") D

ON H.SalesOrderId = D.SalesOrderId

WHERE H.IdSourceItem IN (<<ASSETID.support.support_SalesLT_SalesOrderHeader>>)

, where:

<<TIMESTAMP.??>>and<<ASSETID.??>>are placeholders that should be filled by the process, according the Assets that the pipeline is loading.- For the creation of custom queries the placeholder available are:

<<TIMESTAMP.{provider-databaseName}.{entity-tableName}>><<ASSETID.{provider-databaseName}.{entity-tableName}>>

Once configured the staging tables, we need to deploy the pipeline in Azure Data Factory through the DataFactoryManager webjob, inside the Data Product resources group. Details about how to do this deployment can be found here. A DataLaketoStaging activity in the pipeline will execute the StagingConfiguration done above, bringing in the data from the Sidra Service to Data Product. After this, we will not need to execute the pipeline manually since the Sync webjob will do this for us, synchronizing the data between Sidra Service and the Data Product.

3. Scenarios¶

There are some key considerations to take into account about the scenarios:

- Note that the scenarios outlined below are progressive and successive scenarios, one after another. They should not be interpreted as alternative scenarios.

- As a reminder, note that the data shown in these scenarios, which synchronize between Data Lake and the Data Product, are coming from previous Data Intake Processes configured by the Sidra UI and ingested later on the Data Lake (DSU or Sidra Service).

- We will add some modifications directly in the database in order to showcase the scenarios that we need to cover.

Scenario 1¶

This scenario just describes the simplest of the scenarios, where there is an initial load for all the tables, then there are a set of incremental loads with some changes, on a couple of different days.

Scenario 1.1. Initial load for all tables¶

On Day 1 (AssetDate = 2021-11-19), we perform an initial load on Sidra Service on date 2021-11-10 for all three Entities.

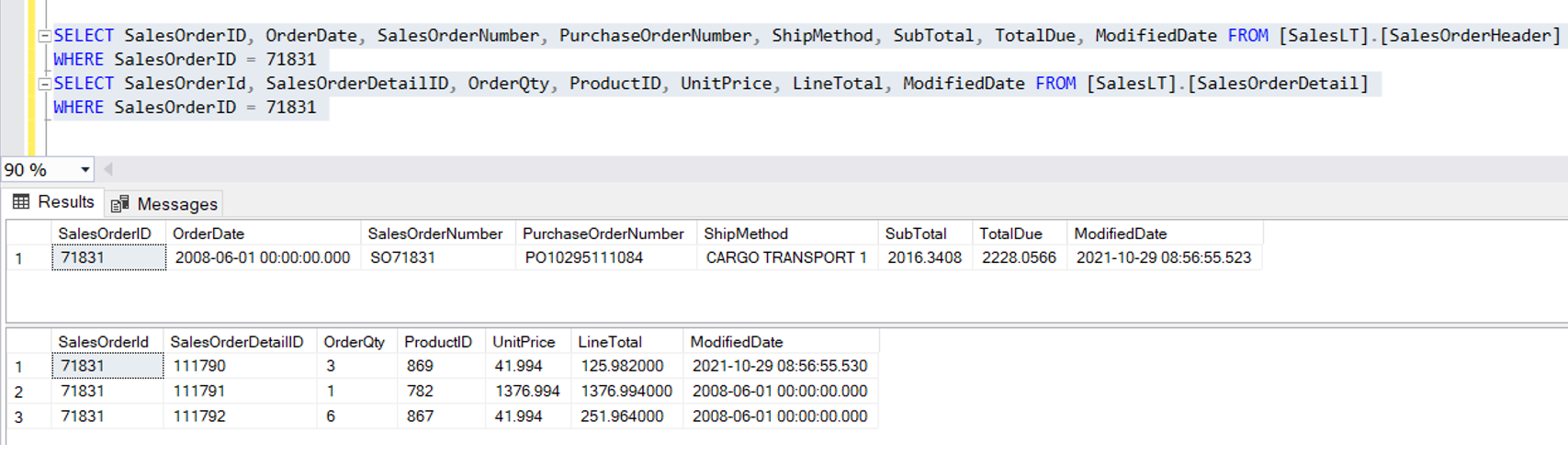

This is the data for a particular Sales Order Id in Adventure Works source tables:

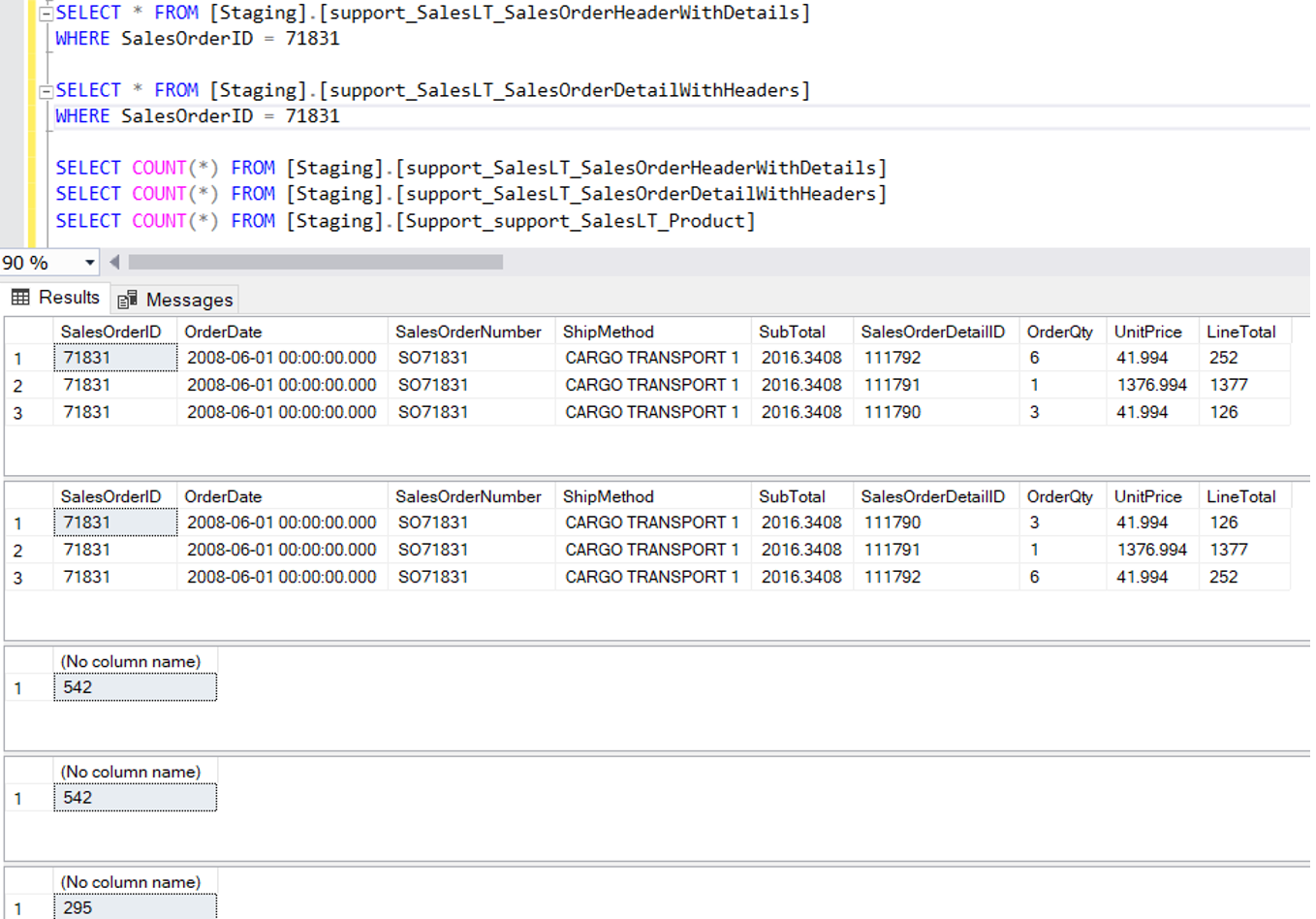

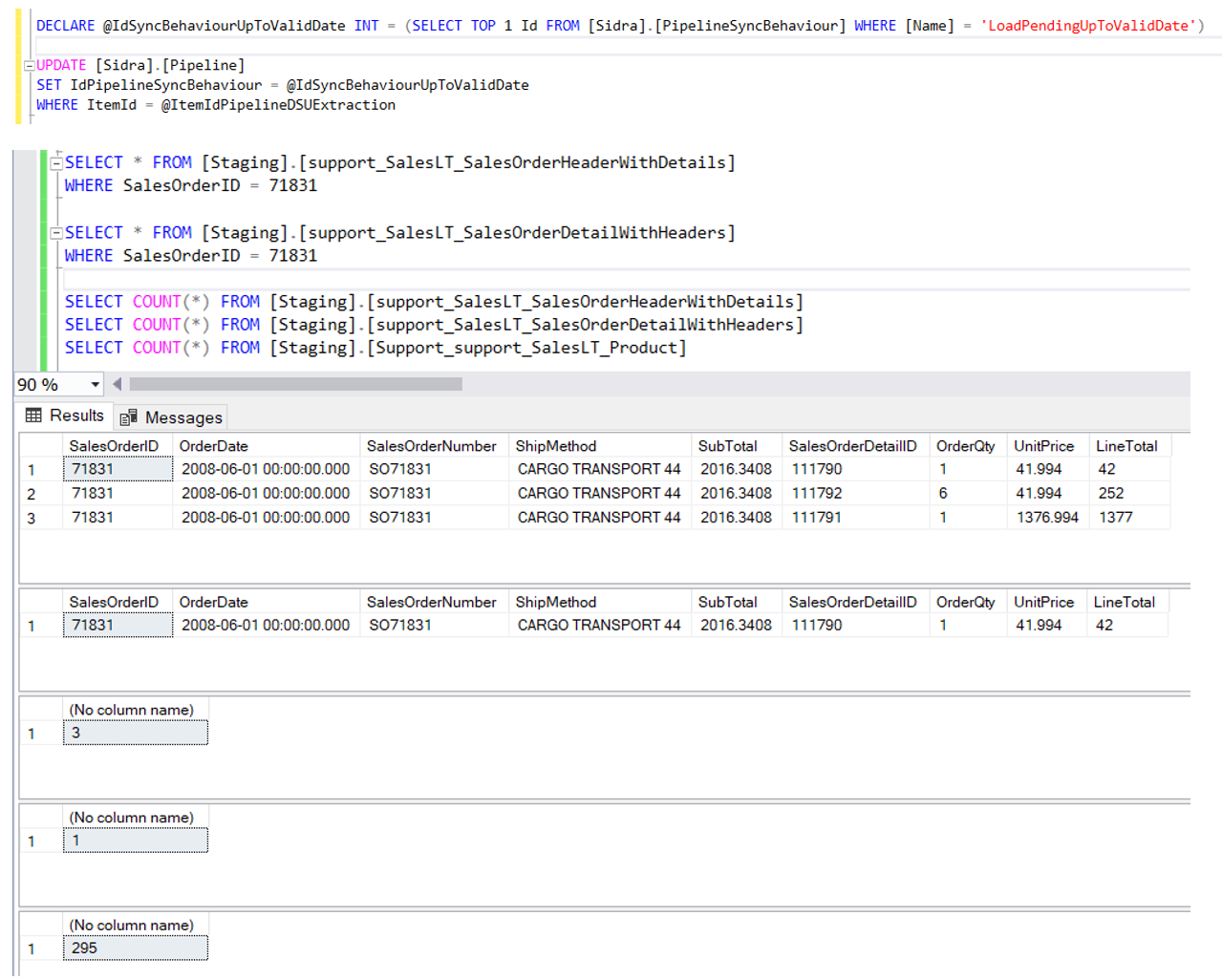

Once this data is synchronized with the Data Product, this is the data for the Data Product staging tables (Day 1, so AssetDate: 2021-11-10), as well as the number of rows:

In the Data Product staging tables, we can see how the two created staging tables just differ on the row order of its elements as given by the order of the left join operation. The count of rows for these two created staging tables is the same.

Scenario 1.2. New incremental load after changes in Header, but not in Detail¶

The AssetDate for this scenario is 2021-11-11. This is the Day 2.

On this day 2, we perform an incremental load.

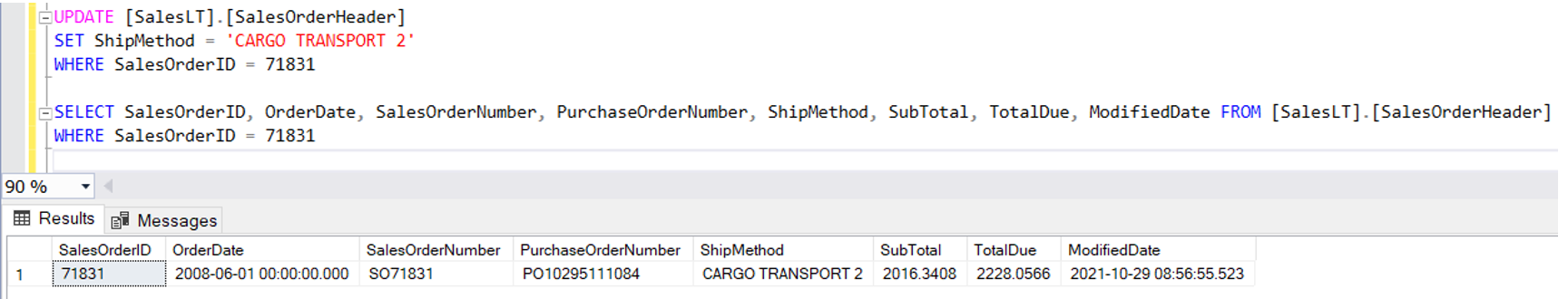

This is the update performed in the source tables:

We can see that data in the Header table in the source has changed, but not the Detail table. We can see the changes in the ShipMethod column in the source system, in the SalesOrderHeader table.

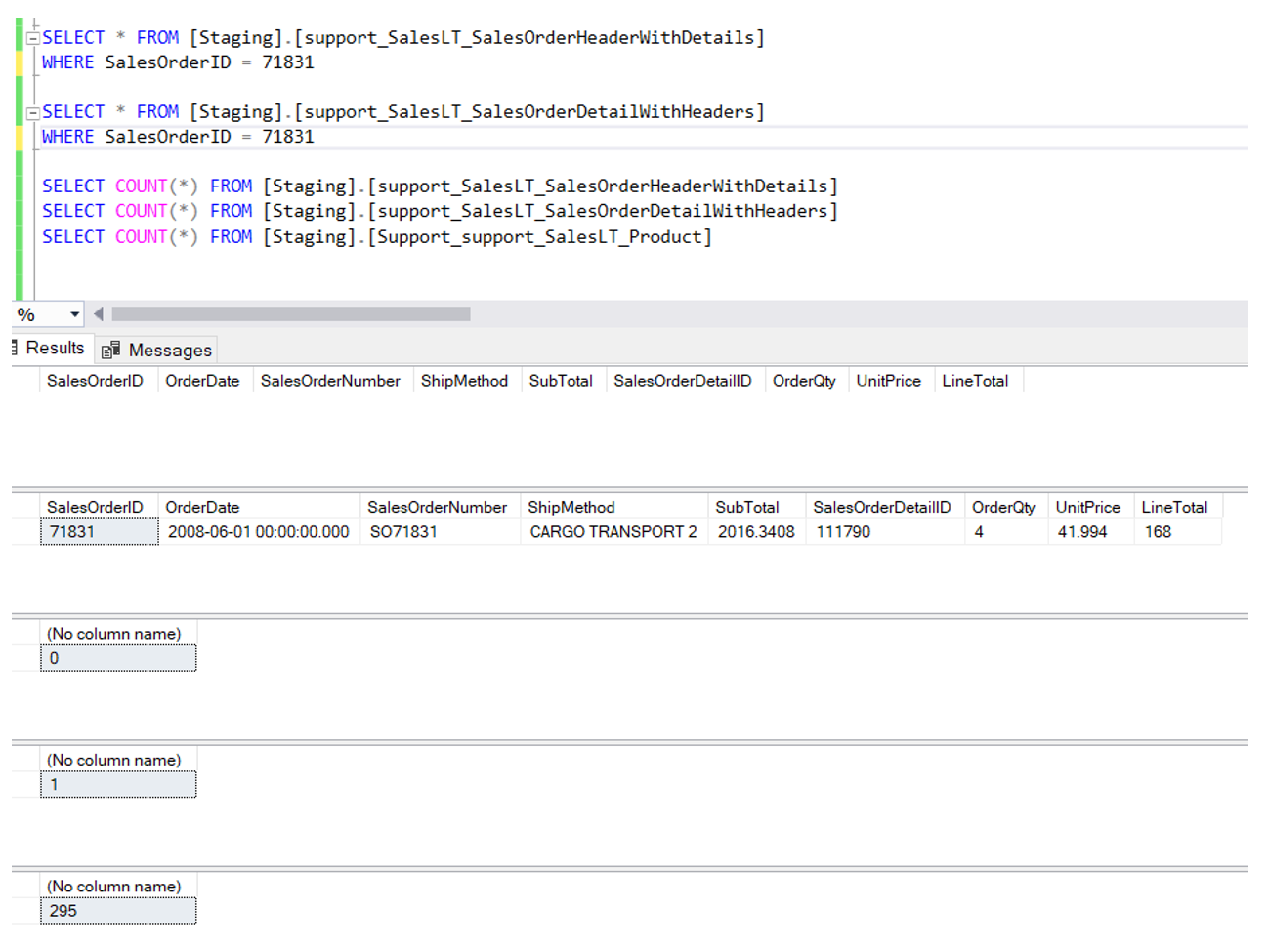

If we check the staging tables in the Data Product, we can see the following:

In this case, we can see:

- There are three records in the

SalesOrderHeaderWithDetailsstaging table, corresponding to the change in the Header table affecting three records. - There are no records in the

SalesOrderHeaderWithDetailsstaging table, as there was not any change in the Detail table.

Scenario 1.3. New incremental load after changes in Detail, but not in Header¶

The AssetDate for this scenario is 2021-11-12. This is the Day 3.

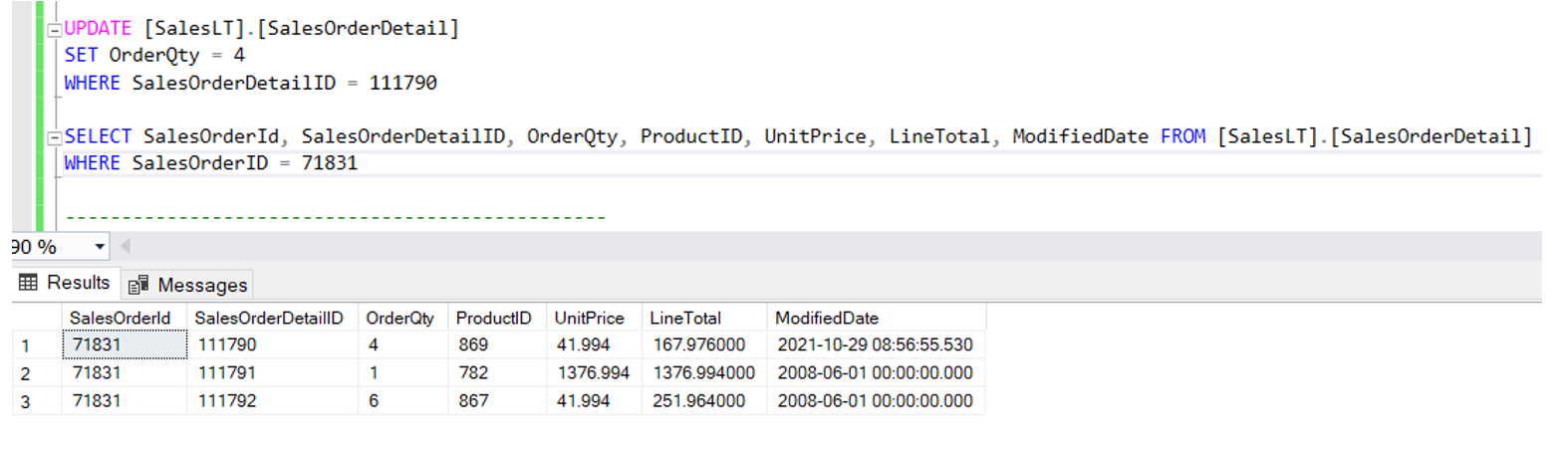

On this day 3, we have perform and incremental load after Day 2, and in this case there are changes in the Detail table in the source:

We can see the changes in the table OrderQty in the SalesOrderDetail table, the value has been set back to 4 from the original value of 3 for a specific SalesOrderDetailId.

In this case we will see the following in the staging tables of the Data Product:

In this case, we can see:

- There are no records in the staging table

SalesOrderHeaderWithDetails, as there are no new Assets for the Header table. - There is one record in

SalesOrderDetailWithHeader, because of this change in theSalesOrderDetailtable.

The scenarios below are new scenarios, alternative to this scenario 1. The purpose with the below scenarios is to reflect different possibilities happening with the data intake, such as errors, or Assets not being generated, and how the Sync behavior of the pipeline and the defined queries for the staging tables behave.

Scenario 2¶

Scenario 2.1. Accumulated loads. Some loads fail, and the Sync does not execute the Data Product pipeline¶

In this scenario we will change the configuration of the data extraction pipeline so that:

This setting is done just to simulate a failure condition in the Data Intake Process in Sidra Service, that results in no Assets being generated (not even empty).

In this scenario, these are the events that we simulate:

- There is a change (increment) on Header on Day 1 (AssetDate=

2021-11-05), but no increment on Detail. The Detail increment does not generate an Asset (because of the empty Asset configuration or because of a real failure of the pipeline). On this Day 1, as a consequence, there is no Data Product pipeline execution happening, as no Asset has been generated. This is because both Assets are marked as mandatory and the Sync does not execute the Data Product pipeline if any of the mandatory Assets are not there. - There is a change (increment) on Detail on Day 2 (AssetDate=

2021-11-06), but no increment on Header. The Header increment does not generate and Asset (because of the empty Asset configuration or because of a real failure of the pipeline). On this Day 2, as a consequence, there is no Data Product pipeline execution happening, as no Asset has been generated. This is because both Assets are marked as mandatory and the Sync does not execute the Data Product pipeline if any of the mandatory Assets are not there. - On day 3 (AssetDate=

2021-11-07), there is a change for Header and Detail, so we will load the accumulated load of these three days.

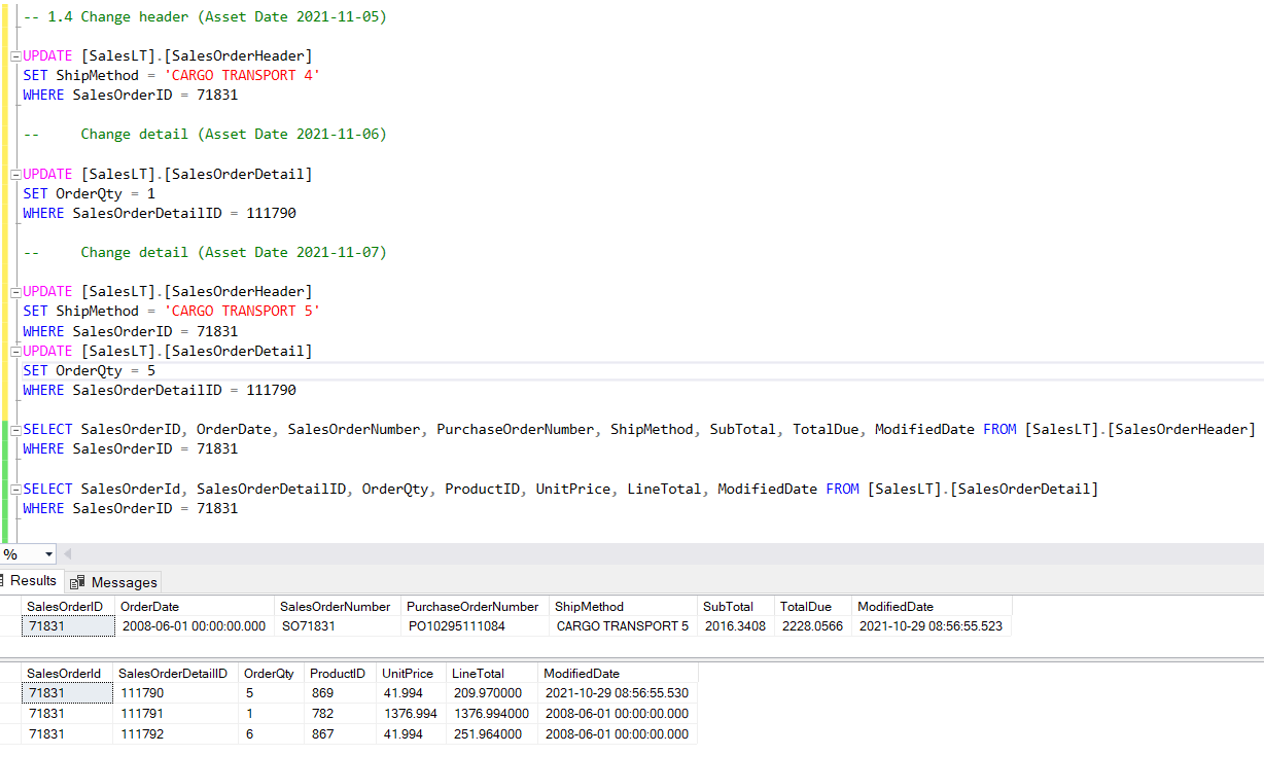

These are the changes in the source tables:

In this case, on Day 3 we can see the following in the staging tables of the Data Product:

- The Header table most up to date is from Day 3, so it will bring the Details corresponding to this day.

- The Detail table most up to date is from Day 3, so it will bring the Header corresponding to this day.

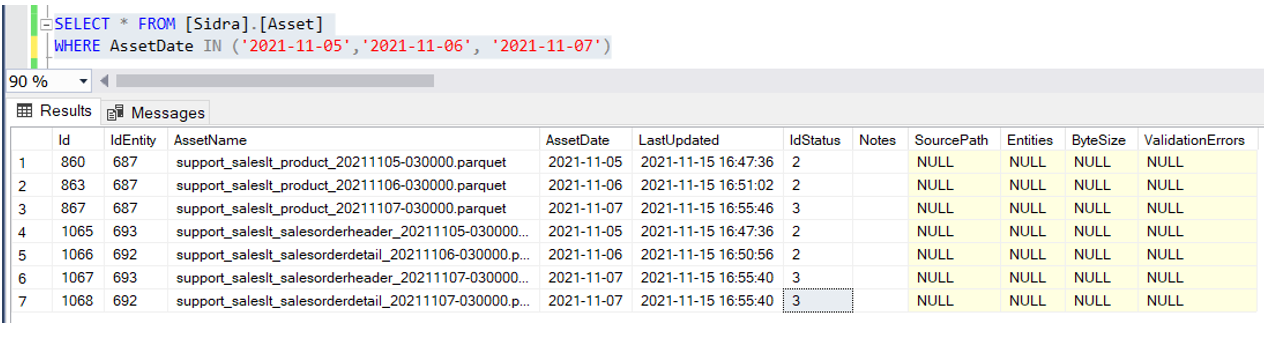

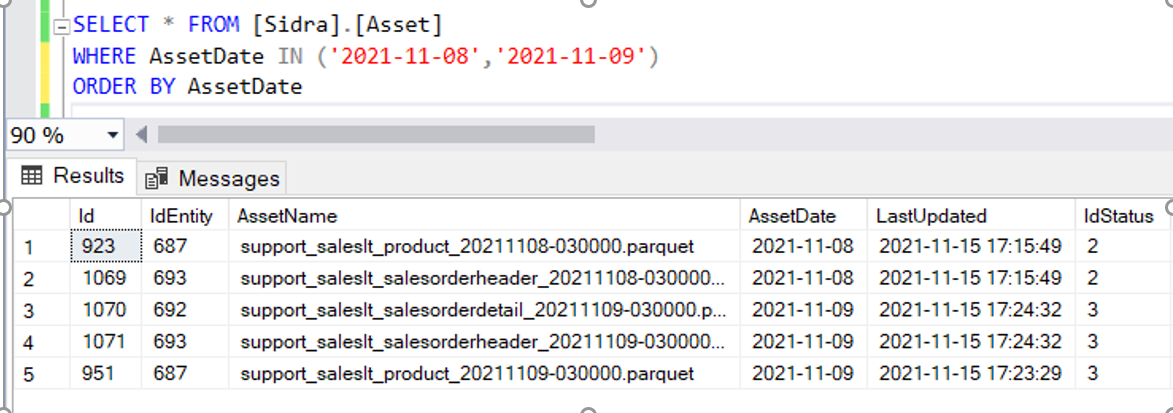

This is what the Asset table will have in the Data Product metadata table, for Day 1, Day 2 and Day 3:

We can see several Assets each date, depending on whether an Asset was generated (because there were changes in the corresponding Entity) or not. So:

- On Day 1, there is one Header Asset, just on the DSU (AssetStatus = 2), but did not reach the Data Product.

- On Day 2, there is one Detail Asset, just on the DSU (AssetStatus = 2), but did not reach the Data Product.

- On Day 3, there is both one Header Asset and one Detail Asset (AssetStatus = 3), that reached the Data Product.

Status 2 means MovedToDataStorageUnit, and Status 3 means ImportedFromDataStorageUnit.

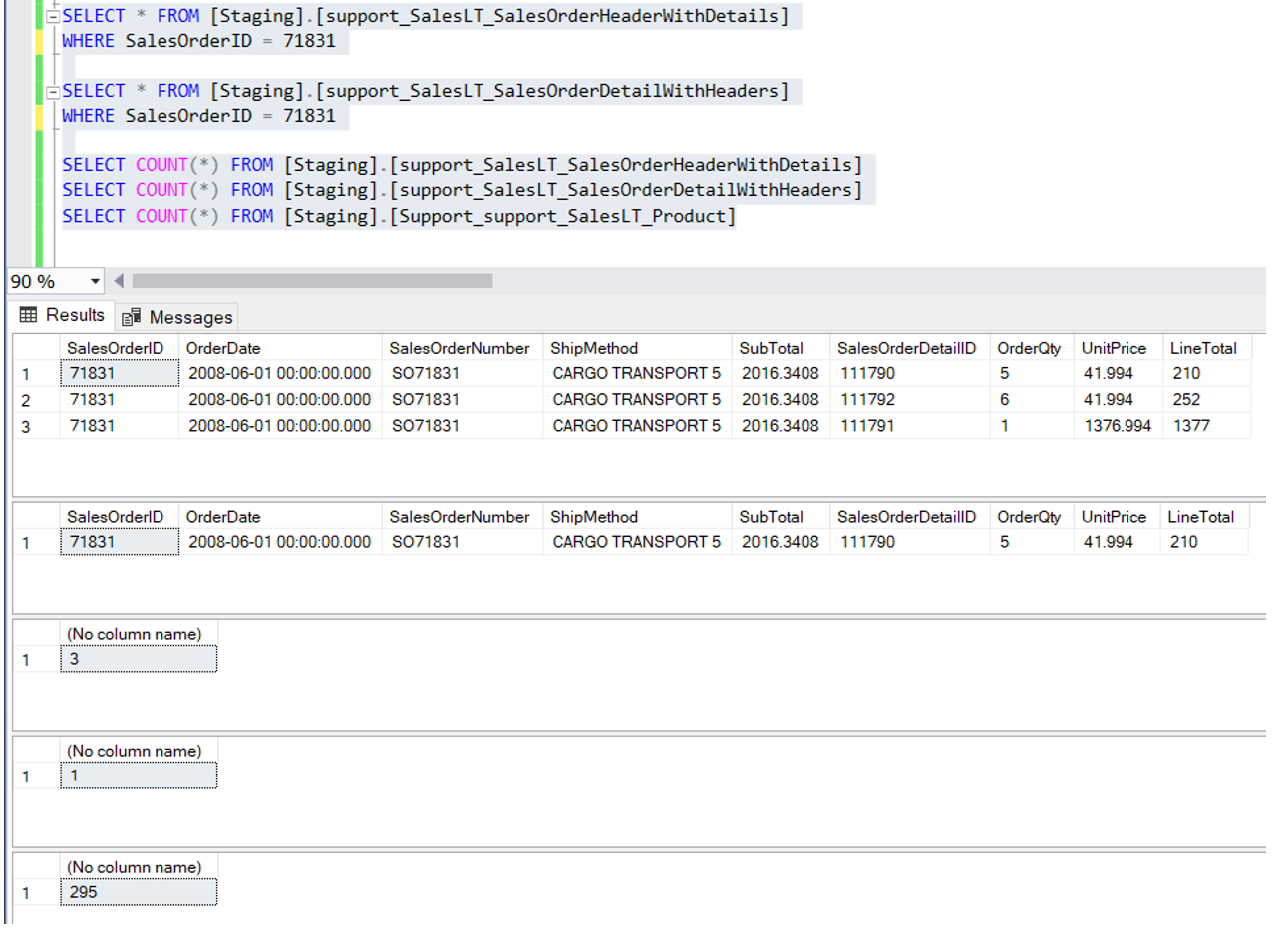

And this is what we will see in the Staging tables:

We can see that, on Day 3:

- In

SalesOrderHeaderWithDetails, we have a total number of 3 records. This is because this table is generated by the left join of Headers on Detail. Headers has a change (therefore, the three Detail records associated to that Header), and Details also. - In the

SalesOrderDetailWithHeaderswe only have 1 record, corresponding to the single update that we had on Day 2 on the Detail table. This is because this table is generated by the left join of Detail on Headers: Details has one change, but Header not.

In the Databricks of the Data Product we won't have the versions of each day, as the Sync has not triggered the Data Product pipeline each day, and the Assets were all defined as mandatory.

Scenario 2.2. Accumulated loads. Some Loads fail but the Asset is generated. The Sync executes the Data Product pipeline¶

In this additional scenario, executed after the scenario 2.1, we will change the configuration of the data extraction pipeline so that:

- "CreateAssetsWithNoData" =

falseduring Day 1 (2021-11-08). This is to simulate here that the Asset is not being generated that day because of any error in the Sidra Service data intake. - "CreateAssetsWithNoData" =

trueduring Day 2 (2021-11-09).

In this scenario, these are the events that we simulate:

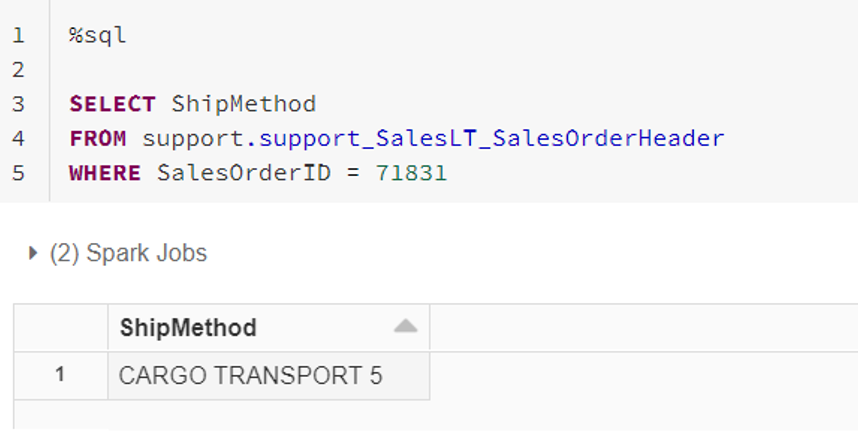

- There is a change (increment) on Header on Day 1 (AssetDate=

2021-11-08). An Asset is generated for Header, but not for Detail. However, the Sync will not execute the pipeline because there are no Assets for all the mandatory tables (Entities). - There are no changes for any table (Header nor Detail) on day 2 (AssetDate=

2021-11-09). Empty Assets are generated for Header and for Detail. The Sync will execute the pipeline because there are Assets for all the mandatory tables (Entities).

This means that in Assets table we will see:

- On day 1, there is one Asset for Header, just on the Data Lake (Status = 2), but not on the Data Product.

- On Day 2, there is both one Asset for Header and one for Detail on Day 2 (Status = 3), and they reach the Data Product. This is because there were empty Assets generated on Day 2 for both tables.

These are the changes in Staging on Day 2:

So, basically we will see that:

- In

SalesOrderHeaderWithDetails, we have a total number of 0 records. This is because:- On day 1, the Sync did not run, so it did not bring the incremental load on Header table.

- On day 2 there were no changes on Header table.

- In the

SalesOrderDetailWithHeaderswe have also 0 records. This is because, although the Sync DID run:- On day 2 there was no change in Detail header.

In Databricks in the Data Product we will have the version just on Day 2, because the Data Product pipeline has run on Day 2, and the Sync behaviour (LoadAllNewDates) does not load all pending Assets from all days, and both Entities are marked as mandatory.

To ensure we have all the modifications in Databricks, we need to include the past Assets from previous days. For enabling this we need to change the Sync behaviour to: LoadPendingUpToValidDate.

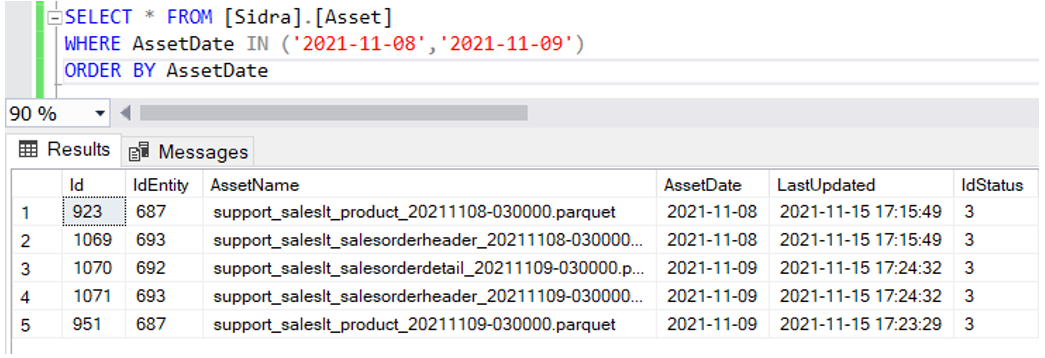

We will see how on the Assets table we have:

- On Day 1, there is one Asset for Header (Status = 3) on the Data Product. This changes from the above behaviour because the new Sync behaviour (

LoadPendingUpToValidDate) will load all Assets that were pending up to the date when all the Mandatory Assets are there. - On Day 2, there is one Asset for Header and one for Detail on Day 2 (Status = 3) on the Data Product, because there were empty Assets generated on Day 2 for both tables.

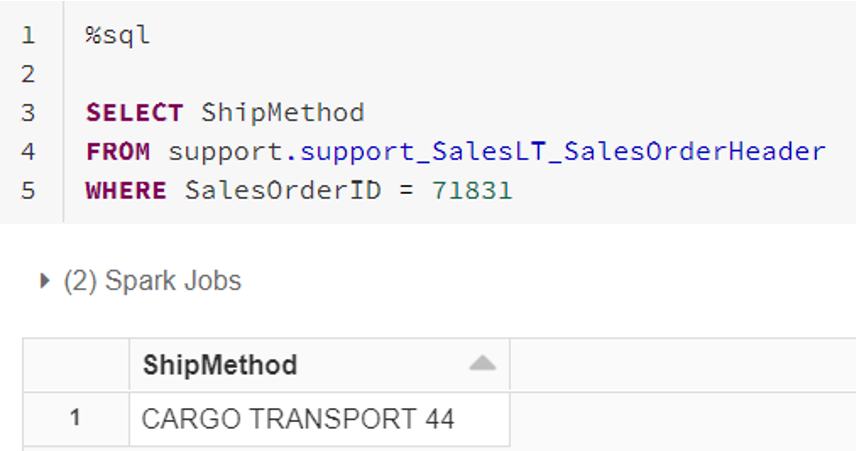

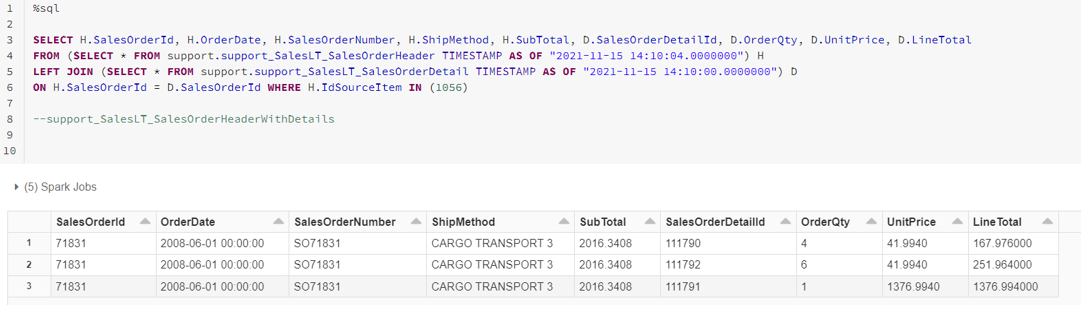

In Databricks we can see:

If we check the Staging tables, we will see:

- In

SalesOrderHeaderWithDetails, we have a total number of 3 records. This is because:- On day 1, the Sync did not run, so it did not bring the incremental load on Header table.

- On day 2, even though there were no changes on Header table, it will bring the changes of Day 1 on Header.

- In the

SalesOrderDetailWithHeaderswe have 0 records. This is because, the Sync DID run and:- On day 2 there were no changes in Detail nor Header. As there is no modification in Detail, the records will be 0.

Scenario 3¶

This scenario is for accumulated loads too. In this case, the Sync is stopped so several correct loads are stored in Sidra Service corresponding to various days.

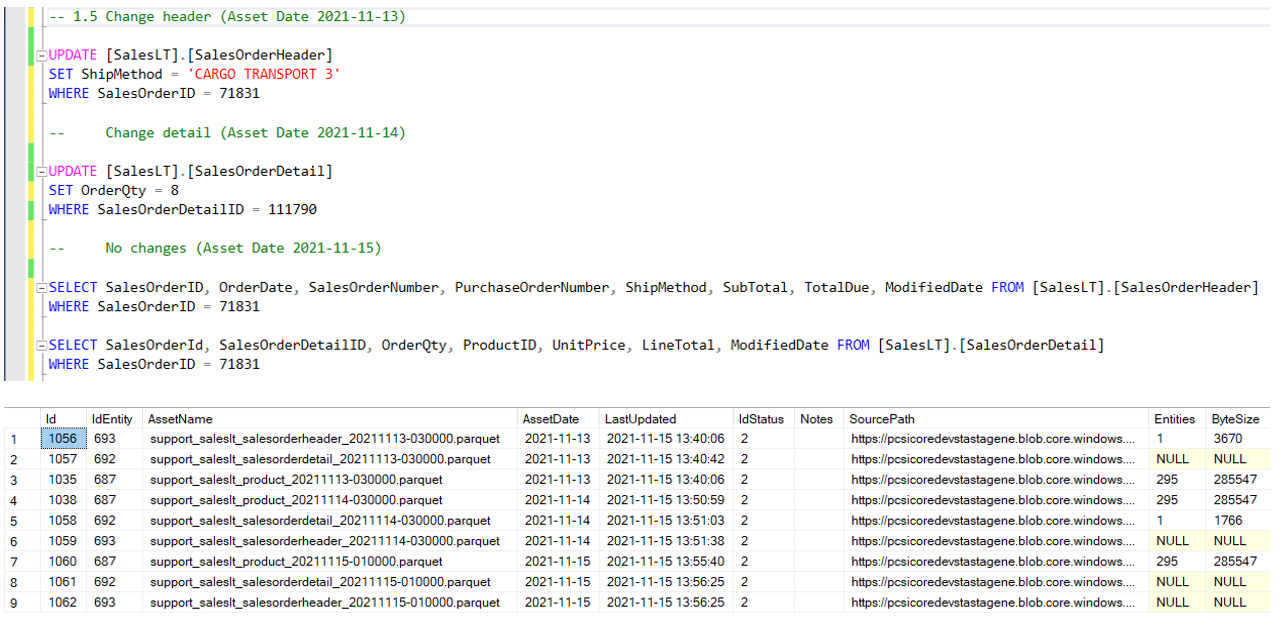

In this scenario, the Sync behaviour is set to: LoadPendingUpToValidDate, and we will simulate:

- On Day 1 (

2021-11-13) there is a change on Header. However, the Sync will not execute the pipeline because there are no Assets for all the mandatory tables. - On Day 2 (

2021-11-14) there is a change on Detail. However, the Sync will not execute the pipeline because there are no Assets for all the mandatory tables. - On Day 3 (

2021-11-15) there are changes in Header and Detail, so an Asset is generated because all mandatory Assets are there. We will also have the accumulated load and Assets of the 2 previous days, because of the Sync behaviour set to the Sync behaviour to:LoadPendingUpToValidDate. Sync executes the pipeline and loads all to the Data Product.

This means that in Assets table we will see:

Which means that:

- There is a change on Header on Day 1 (AssetDate=

2021-11-13). No Asset is generated for Header on this day. - There is a change on Detail on Day 1 (AssetDate=

2021-11-13). No Asset is generated for Detail on this day. - There is a change on Header on Day 2 (AssetDate =

2021-11-14). No Asset is generated for Header on this day. - There is a change on Detail on Day 2 (AssetDate =

2021-11-14). No Asset is generated for Detail on this day. - There is a change on Header on Day 3 (AssetDate =

2021-11-15). An Asset is generated for Header for this day and also Assets are generated for the accumulated days as well (Day 1 and Day 2). - There is a change on Detail on Day 3 (AssetDate =

2021-11-15). An Asset is generated for Detail for this day and also Assets are generated for the accumulated days as well (Day 1 and Day 2).

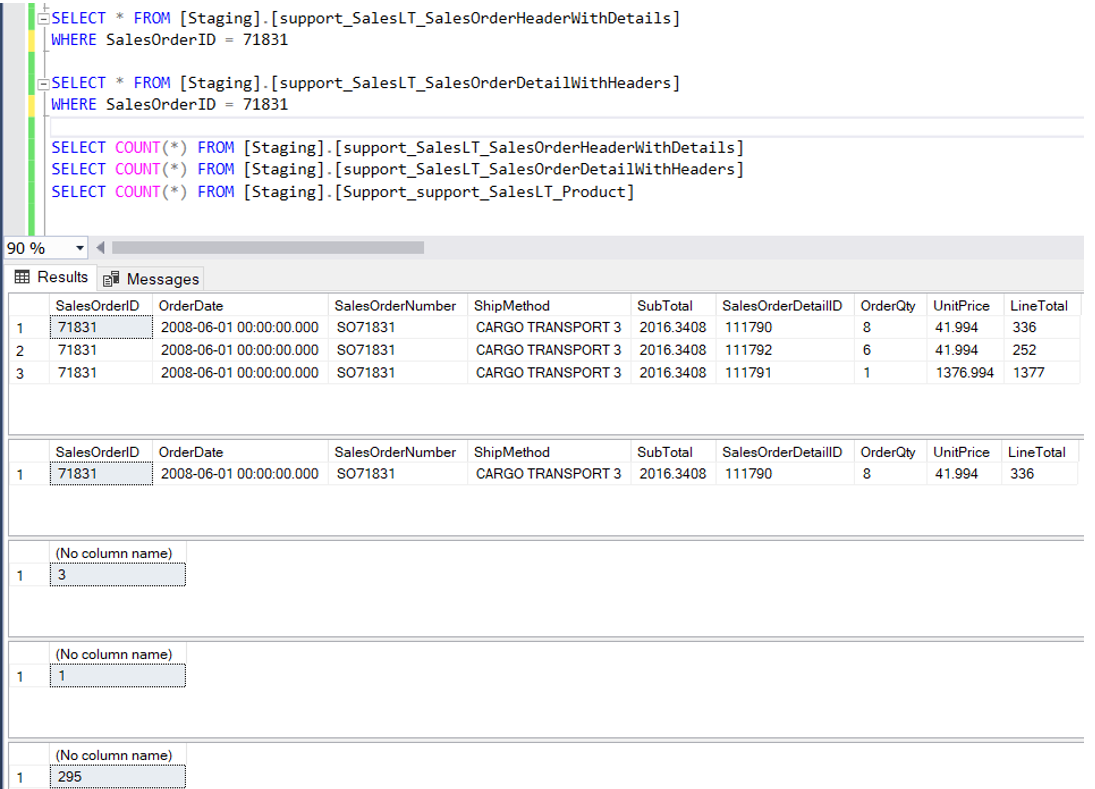

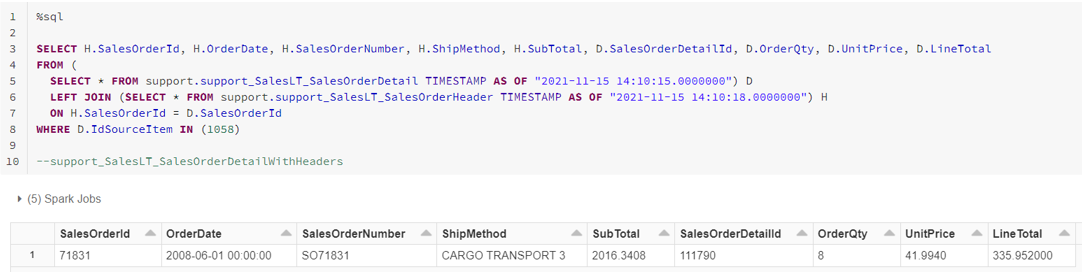

In Staging, on Day 3, we will therefore see the following:

- The most up to date Header is from Day 1 and it will bring the Details from that day.

- The most up to date Detail is from Day 2 and it will bring the Header from that day.

- In

SalesOrderHeaderWithDetails, we have a total number of 3 records. This is because:- On day 3 the Sync we brought all the Assets for both the Header and the Detail tables.

- In the

SalesOrderDetailWithHeaderswe have 1 record. This is because, the Sync DID run and:- On day 2 there was one change in Detail header, and there was one Asset matching from the previous days for Header.

In Databricks we will have the versions for each day: