Understand the Sidra data lake approach

Unlike traditional data warehouses, data lakes store large volumes of structured and unstructured data in its original form. Providing a single platform for all data types and formats helps reduce costs.

It also simplifies data ingestion logic by shifting the paradigm from ETL (Extract, Transform, Load) to ELT (Extract, Load, Transform). Each Data Product can then take the loaded data and transform it according to its own business needs.
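To illustrate the ELT flow, here is a minimal sketch in Python. The in-memory `raw_zone` list, the helper functions, and the two rules are hypothetical stand-ins, not Sidra APIs: rows are extracted and loaded once without any business logic, and each Data Product applies its own transformation afterwards.

```python
from typing import Callable, Optional

Row = dict
raw_zone: list[Row] = []  # hypothetical stand-in for the raw storage


def extract_and_load(source_rows: list[Row]) -> None:
    # E + L: store the rows as they are, without any business rules
    raw_zone.extend(dict(row) for row in source_rows)


def transform(rule: Callable[[Row], Optional[Row]]) -> list[Row]:
    # T: runs later, once per Data Product, on copies of the raw rows
    results = (rule(dict(row)) for row in raw_zone)
    return [row for row in results if row is not None]


def sales_rule(row: Row) -> Optional[Row]:
    # Hypothetical rule: keep only positive amounts
    return row if row["amount"] > 0 else None


def audit_rule(row: Row) -> Optional[Row]:
    # Hypothetical rule: keep every row and flag refunds
    return {**row, "is_refund": row["amount"] < 0}


extract_and_load([{"id": 1, "amount": 120.0}, {"id": 2, "amount": -30.0}])
sales_view = transform(sales_rule)
audit_view = transform(audit_rule)
print(sales_view)
print(audit_view)
```

Because `transform` works on copies, the raw rows stay untouched, so a new Data Product can later apply a different rule to the same loaded data.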

The Sidra data lake approach provides the following benefits:

Data is loaded into the system only once, in raw format

Loading data a single time reduces the number of actors involved and simplifies the entire process. Each Data Product then transforms the data according to its own business rules and validations.

Protection against changes to the provider schemas

You can enable schema evolution so that changes in your source database schema are reflected in, and integrated with, your data, taking advantage of Sidra's schema-on-read approach. This provides flexibility and resilience to schema modifications.
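A minimal schema-on-read sketch, assuming raw records are kept exactly as they landed and a hypothetical `read_with_schema` helper applies the schema only when the data is read. With `evolve=True`, a column that appeared later in the source (here `country`) is added to the schema instead of breaking the read. This illustrates the general idea, not Sidra's implementation.

```python
from typing import Any

# Raw records exactly as they landed; the source added "country" later
raw_records: list[dict[str, Any]] = [
    {"id": 1, "name": "Contoso"},
    {"id": 2, "name": "Fabrikam", "country": "ES"},
]


def read_with_schema(
    records: list[dict[str, Any]], columns: list[str], evolve: bool = False
) -> tuple[list[str], list[dict[str, Any]]]:
    """Apply a schema when reading (schema-on-read), not when loading."""
    effective = list(columns)
    if evolve:
        # Schema evolution: append any column the data has that the schema lacks
        for record in records:
            for key in record:
                if key not in effective:
                    effective.append(key)
    # Missing values become None instead of failing the read
    rows = [{col: record.get(col) for col in effective} for record in records]
    return effective, rows


cols, rows = read_with_schema(raw_records, ["id", "name"])
cols_evolved, rows_evolved = read_with_schema(raw_records, ["id", "name"], evolve=True)
print(cols_evolved)
print(rows_evolved)
```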

Reduction in storage costs

Unlike traditional relational storage models, a data lake uses clustered or cloud file systems, which allow raw data to be stored at a fraction of the cost.

Data lake architecture

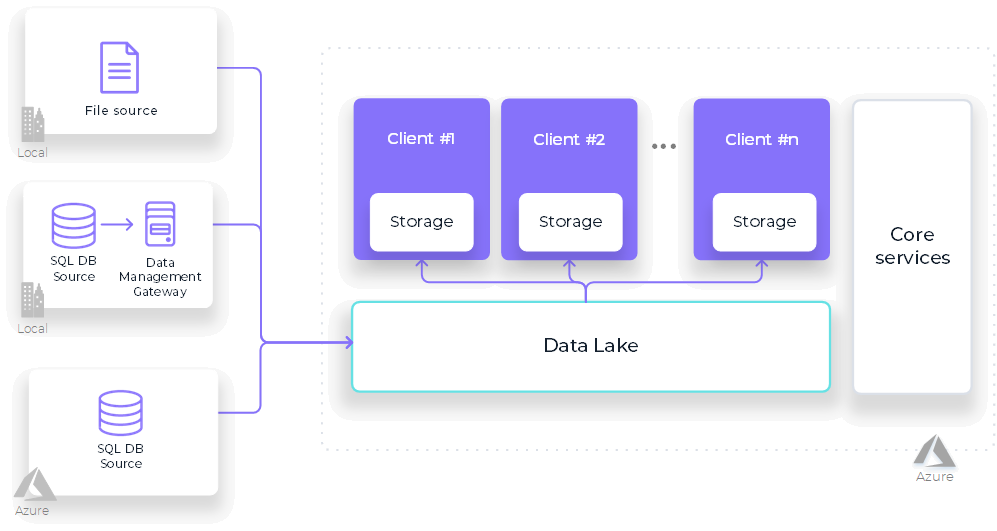

The following diagram provides a high-level view of Sidra’s data lake architecture approach:

Multiple clients access a shared storage service, and each client has its own application-specific storage that is synchronized with the main repository. Common services, such as security and auditing, are provided across the whole system.

Data storage (Azure Data Lake Storage)

Sidra stores all data in an optimized Databricks Delta format, so that it is compressed, well partitioned, and indexed for efficient access. Azure Data Lake Storage Gen2 (ADLS Gen2) is the underlying storage service. Once data integrity has been ensured and the data has been optimized, Data Products can access it and transform it according to their business logic.
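Partitioning is what lets queries skip irrelevant data. Delta tables keep their data files in Hive-style directories named `column=value`; the sketch below, using a hypothetical table root and a hypothetical `load_date` partition column, groups rows into that layout and then prunes partitions for a filter. It is a plain-Python illustration of the layout, not the Databricks or Delta Lake API.

```python
from collections import defaultdict


def partition_paths(
    table_root: str, rows: list[dict], partition_cols: list[str]
) -> dict[str, list[dict]]:
    """Group rows into Hive-style partition directories (col=value/...)."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for row in rows:
        # The partition values become directory names under the table root
        parts = "/".join(f"{col}={row[col]}" for col in partition_cols)
        # Partition columns are encoded in the path, so the stored row omits them
        data = {k: v for k, v in row.items() if k not in partition_cols}
        groups[f"{table_root}/{parts}"].append(data)
    return dict(groups)


def prune(layout: dict[str, list[dict]], col: str, value: str) -> dict[str, list[dict]]:
    """Keep only the partitions matching col=value (partition pruning)."""
    key = f"{col}={value}"
    return {path: data for path, data in layout.items() if key in path.split("/")}


# Hypothetical table root and partition column
rows = [
    {"id": 1, "amount": 10, "load_date": "2024-01-01"},
    {"id": 2, "amount": 20, "load_date": "2024-01-01"},
    {"id": 3, "amount": 5, "load_date": "2024-01-02"},
]
layout = partition_paths("/datalake/optimized/sales", rows, ["load_date"])
for path in layout:
    print(path)
print(prune(layout, "load_date", "2024-01-02"))
```

A query filtered on `load_date` only needs to read the matching directory, which is why the choice of partition column matters for access efficiency.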

Data movement (Azure Data Factory)

Populating a data lake requires ETL and ELT processes that fetch data from diverse sources. Data may also be moved internally from the data lake to one or more data marts. Sidra uses Azure Data Factory (ADF) for these operations. ADF is a widely deployed cloud-based data integration service that orchestrates and automates data movement and transformation across both cloud and on-premises environments.
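A common way to move only new or changed data, for example from the data lake to a data mart, is the high-watermark pattern that ADF documents for incremental loads: read the stored watermark, look up the current maximum, copy the rows in between, then save the new watermark. The sketch below mimics those steps in plain Python; the `IncrementalCopy` class and the `modified_at` column are hypothetical, and in ADF these steps would be pipeline activities rather than code.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalCopy:
    """Hypothetical high-watermark copy from a data lake table to a data mart."""

    watermark: int = 0  # highest modified_at value already copied
    target: list[dict] = field(default_factory=list)  # stand-in for the data mart

    def run(self, source: list[dict]) -> int:
        # Step 1: the old watermark is self.watermark
        # Step 2: look up the new watermark (never lower than the old one)
        new_watermark = max([self.watermark] + [r["modified_at"] for r in source])
        # Step 3: copy only the rows changed since the last run
        batch = [r for r in source if self.watermark < r["modified_at"] <= new_watermark]
        self.target.extend(batch)
        # Step 4: persist the new watermark for the next run
        self.watermark = new_watermark
        return len(batch)


source = [{"id": 1, "modified_at": 100}, {"id": 2, "modified_at": 105}]
job = IncrementalCopy()
first = job.run(source)   # copies ids 1 and 2
source.append({"id": 3, "modified_at": 110})
second = job.run(source)  # copies only id 3
third = job.run(source)   # nothing new to copy
print(first, second, third, job.watermark)
```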

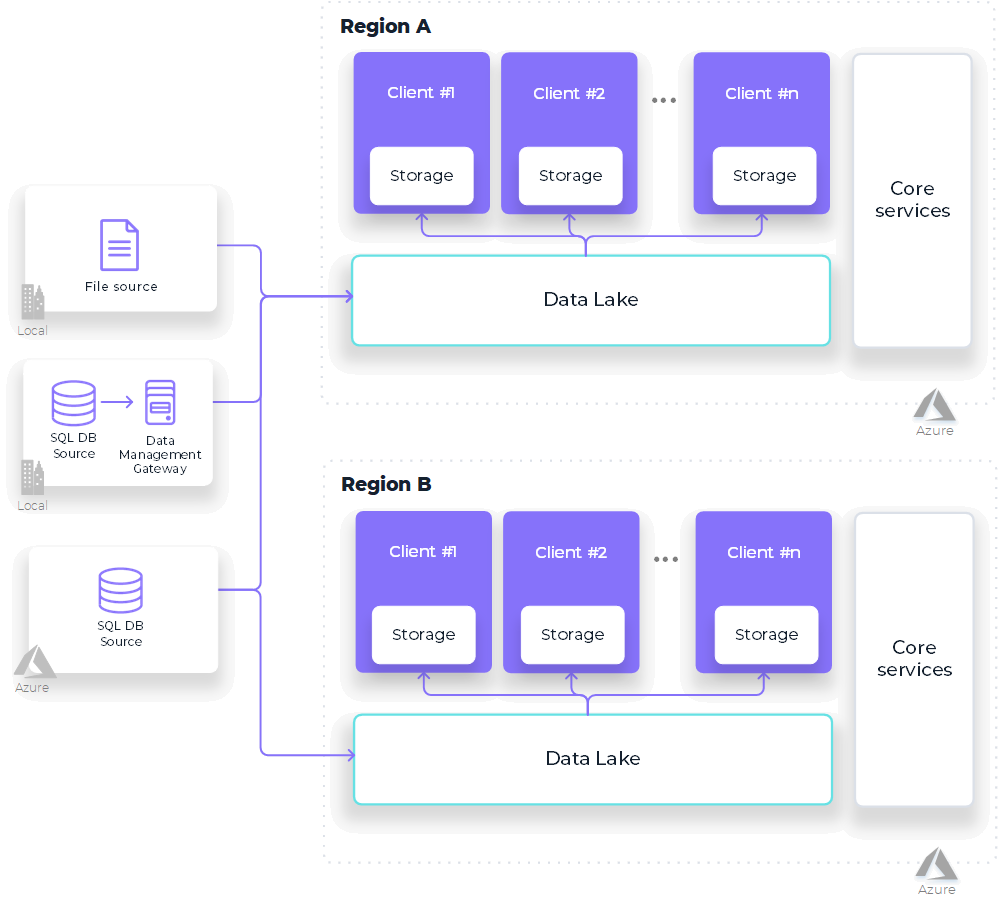

Multiple data lake regions

Sidra supports one or more Data Storage Units (DSUs). Each DSU is a resource group integrated with Sidra Service and contains the resources needed for data intake and processing.

Each DSU can be deployed independently and, if necessary, in a different region. A DSU comprises the following components:

- An ingestion zone (or landing zone).

- A raw storage area.

- An optimized storage area, using ADLS Gen2.
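The path data takes through these components can be sketched as follows. `DataStorageUnit` and its methods are hypothetical, and in-memory dictionaries stand in for the actual storage accounts: files arrive in the landing zone, are kept unchanged in raw storage, and are then converted into a queryable form in optimized storage (here simply parsed from CSV, whereas Sidra uses the Delta format).

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class DataStorageUnit:
    """Hypothetical model of a DSU and its three storage areas."""

    name: str
    region: str
    landing: dict[str, str] = field(default_factory=dict)  # ingestion (landing) zone
    raw: dict[str, str] = field(default_factory=dict)  # raw storage: files kept as-is
    optimized: dict[str, list[dict]] = field(default_factory=dict)  # optimized storage stand-in

    def land(self, file_name: str, content: str) -> None:
        # A source drops a file into the landing zone
        self.landing[file_name] = content

    def move_to_raw(self) -> None:
        # Files leave the landing zone and are kept unchanged in raw storage
        self.raw.update(self.landing)
        self.landing.clear()

    def optimize(self, file_name: str) -> None:
        # Stand-in for conversion to an optimized format: parse CSV into rows
        reader = csv.DictReader(io.StringIO(self.raw[file_name]))
        self.optimized[file_name] = [dict(row) for row in reader]


dsu = DataStorageUnit(name="dsu-weu", region="westeurope")  # hypothetical values
dsu.land("customers.csv", "id,name\n1,Contoso\n2,Fabrikam\n")
dsu.move_to_raw()
dsu.optimize("customers.csv")
print(dsu.optimized["customers.csv"])
```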

All DSUs share the common services provided by Sidra Service, while each DSU can have its own configuration and may be located in its own Azure region.

This flexibility supports diverse business needs, such as complying with data protection regulations that require data to be stored in specific countries. Data Products can then be configured to access data from one or more DSUs as required.
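As a sketch of the data residency scenario, assume each DSU records its Azure region and each Data Product lists the regions it is allowed to read from. The `Dsu` and `DataProduct` classes and the region assignments are hypothetical; they only show how such a restriction could be expressed, not how Sidra configures it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dsu:
    """Hypothetical DSU descriptor: a name and the Azure region it runs in."""

    name: str
    region: str


@dataclass
class DataProduct:
    """Hypothetical Data Product restricted to a set of allowed regions."""

    name: str
    allowed_regions: set[str]

    def accessible_dsus(self, dsus: list[Dsu]) -> list[str]:
        # Only DSUs located in an allowed region are visible to this product
        return [d.name for d in dsus if d.region in self.allowed_regions]


dsus = [
    Dsu("dsu-eu", "westeurope"),
    Dsu("dsu-us", "eastus"),
    Dsu("dsu-ch", "switzerlandnorth"),
]
eu_reporting = DataProduct("eu-reporting", {"westeurope", "switzerlandnorth"})
global_analytics = DataProduct("global-analytics", {"westeurope", "eastus", "switzerlandnorth"})
print(eu_reporting.accessible_dsus(dsus))
print(global_analytics.accessible_dsus(dsus))
```

Keeping the restriction on the Data Product side means a new DSU in a new region only becomes visible to the products that explicitly allow that region.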