Orchestration and computation in Sidra¶

Orchestration within Sidra is managed through Azure Data Factory, while computational tasks are executed using Databricks. A detailed introduction to Azure Data Factory (ADF) or Databricks is outside the scope of this document, but the key concepts needed to understand the rest of the Sidra platform documentation are summarized here.

Additional information can be found in the official Azure Data Factory documentation and in the official Databricks documentation.

Orchestration with Data Factory¶

Data Factory is a cloud-based data integration service that allows you to create data-driven workflows in the cloud for orchestrating and automating data movement and data transformation. In ADF, we can create and schedule data-driven workflows (called pipelines) that can ingest data from disparate data stores.

How does it work¶

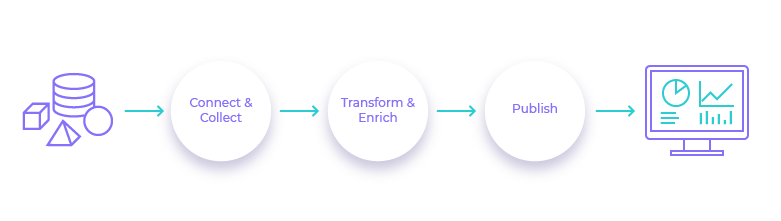

In Azure Data Factory the pipelines (data-driven workflows) typically perform the following four steps:

- Connect & Collect

  Enterprises typically hold data of various types (structured, unstructured and semi-structured) located in disparate sources, both on-premises and in the cloud, all arriving at different intervals and speeds. To build a centralized information system for subsequent processing, all these sources need to be connected so that they can provide their data. Azure Data Factory centralizes these connectors, which avoids building custom data movement components or writing custom services to integrate the data sources and their processing; such systems are expensive and hard to integrate and maintain.

- Transform & Enrich

  Once the data is centralized, it is processed and transformed using compute services.

- Publish

  After the raw data has been refined into a business-ready consumable form, it is loaded and published so that it can be consumed by Data Products.

- Monitor

  Once the data is published, it can be monitored in order to detect anomalies, improve processing and observe production.

ADF components¶

Azure Data Factory is composed of five key components that work together to compose data-driven workflows with steps to move and transform data. In summary, these are the basic components used to create data workflows in ADF:

- Pipelines

- Activities

- Triggers

- Connections: Linked Services and Integration Runtime

- Datasets

The ADF portal is a website that lets you manage all the components of ADF in a visual and easy way.

Moreover, every component can be defined in a JSON file, which can be used to manage ADF programmatically. The complete definition of a data workflow can be composed of a set of JSON files. For example, this is the definition (or configuration) of a trigger that runs a pipeline when a new file is stored in an Azure Blob Storage account:

    {
      "name": "Core Storage Blob Created",
      "properties": {
        "type": "BlobEventsTrigger",
        "typeProperties": {
          "blobPathBeginsWith": "/landing/blobs/",
          "scope": "/subscriptions/XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX/resourceGroups/Core.Dev.NorthEurope/providers/Microsoft.Storage/storageAccounts/corestadev",
          "events": [
            "Microsoft.Storage.BlobCreated"
          ]
        },
        "pipelines": [
          {
            "pipelineReference": {
              "referenceName": "ingest-from-landing",
              "type": "PipelineReference"
            },
            "parameters": {
              "folderPath": "@triggerBody().folderPath",
              "fileName": "@triggerBody().fileName"
            }
          }
        ]
      }
    }
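As a rough illustration of how such a JSON definition can be handled programmatically, the following Python sketch parses the trigger definition above and summarizes what it does. The `summarize_trigger` helper is hypothetical and is not part of Sidra or of any Azure SDK; it only reads the fields that appear in the sample.

```python
import json

# The trigger definition shown above, embedded so the example is self-contained.
TRIGGER_JSON = """
{
  "name": "Core Storage Blob Created",
  "properties": {
    "type": "BlobEventsTrigger",
    "typeProperties": {
      "blobPathBeginsWith": "/landing/blobs/",
      "scope": "/subscriptions/XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX/resourceGroups/Core.Dev.NorthEurope/providers/Microsoft.Storage/storageAccounts/corestadev",
      "events": ["Microsoft.Storage.BlobCreated"]
    },
    "pipelines": [
      {
        "pipelineReference": {
          "referenceName": "ingest-from-landing",
          "type": "PipelineReference"
        },
        "parameters": {
          "folderPath": "@triggerBody().folderPath",
          "fileName": "@triggerBody().fileName"
        }
      }
    ]
  }
}
"""


def summarize_trigger(definition: dict) -> dict:
    """Hypothetical helper: extract the main facts from a trigger definition."""
    props = definition["properties"]
    type_props = props.get("typeProperties", {})
    pipelines = props.get("pipelines", [])
    return {
        "name": definition["name"],
        "type": props["type"],
        "events": type_props.get("events", []),
        "path_prefix": type_props.get("blobPathBeginsWith"),
        # Names of the pipelines started by this trigger.
        "pipelines": [p["pipelineReference"]["referenceName"] for p in pipelines],
        # Pipeline parameter names that are filled from the trigger event.
        "parameters": sorted({k for p in pipelines for k in p.get("parameters", {})}),
    }


summary = summarize_trigger(json.loads(TRIGGER_JSON))
print(json.dumps(summary, indent=2))
```

The summary makes the wiring explicit: a `BlobCreated` event under the `/landing/blobs/` prefix starts the `ingest-from-landing` pipeline and passes it the folder path and file name of the new blob.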

1. Pipeline¶

Data Factory pipelines (not to be confused with Azure Pipelines, the Azure DevOps service) are composed of independent activities that each perform one task. When those activities are chained, it is possible to apply different transformations and move the data from the source to the destination. For example, a pipeline can contain a group of activities that ingest data and then transform it by applying a query. ADF can execute multiple pipelines at the same time.

The management of ADF pipelines is automated by a key architectural piece, the Data Factory Manager. This accelerator enables programmatic management of ADF pipelines and greatly streamlines any changes required by new data sources or by changes in existing ones, avoiding manual intervention.
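To make the idea of chained activities more concrete, here is a minimal Python sketch. It assumes a simplified pipeline definition in which each activity lists its predecessors under `dependsOn`, following the general shape of ADF pipeline JSON. The pipeline name and activity names are invented for illustration, and the `execution_order` helper is hypothetical; it only derives one valid order from the dependencies and does not reproduce how ADF schedules activities.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical pipeline definition: names are illustrative only.
pipeline = {
    "name": "example-ingest-and-transform",
    "properties": {
        "activities": [
            {
                "name": "TransformData",
                "type": "DatabricksNotebook",
                "dependsOn": [
                    {"activity": "CopyFromSource", "dependencyConditions": ["Succeeded"]}
                ],
            },
            {"name": "CopyFromSource", "type": "Copy", "dependsOn": []},
            {
                "name": "RegisterResult",
                "type": "SqlServerStoredProcedure",
                "dependsOn": [
                    {"activity": "TransformData", "dependencyConditions": ["Succeeded"]}
                ],
            },
        ]
    },
}


def execution_order(pipeline_def: dict) -> list:
    """Return one order in which the activities could run, predecessors first."""
    # Map each activity name to the set of activities it depends on.
    graph = {
        activity["name"]: {dep["activity"] for dep in activity.get("dependsOn", [])}
        for activity in pipeline_def["properties"]["activities"]
    }
    return list(TopologicalSorter(graph).static_order())


order = execution_order(pipeline)
print(" -> ".join(order))
```

The order in which activities appear in the definition does not matter; only the dependencies do. Activities with no dependency between them could run in parallel, which this sketch does not model.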

2. Activity¶

Activities represent a processing step in a pipeline, for example, copying data from one data store to another. Several tasks are natively supported in Data Factory, such as the copy activity, which simply copies data from a source to a destination. However, some processes are not supported natively. For custom behaviours, ADF provides a type of activity named Custom Activity, which allows the user to implement domain-specific business logic. Custom Activities can be coded in any programming language supported by Microsoft Azure Virtual Machines (Windows or Linux).

3. Triggers¶

Pipelines can be executed manually (by means of the ADF portal, PowerShell, the .NET SDK, etc.) or automatically by a Trigger. Triggers, like pipelines, are configured in ADF and are one of its basic components.

4. Connections: Linked Services and Integration Runtime¶

To reference the systems where the data is located (such as an SFTP server or a SQL Server database), there are objects called Linked Services. They basically behave like connection strings. Sometimes it is necessary to access a data store that is not reachable from the Internet. In these cases, it is necessary to use what is known as an Integration Runtime, which is software that allows Data Factory to communicate with the data store by using an environment as a gateway. In the ADF portal, linked services and integration runtimes are grouped in a category called Connections.

Integration Runtimes¶

Integration Runtimes are, basically, sets of (virtual) machines that execute Data Factory pipeline activities. Post deployment, in certain scenarios, Sidra may need custom or on-premises Integration Runtimes (IRs). These will require a Java Runtime Environment on their nodes, as per Sidra's Databricks needs.

Sidra uses Azure Data Factory to ingest data, copying it from the sources into Databricks. Data is ingested by executing Data Factory pipelines, which are composed of activities. The activities are processed in Integration Runtimes.

Azure Data Factory comes with a default Azure-based Microsoft-managed AutoResolveIntegrationRuntime. Sidra, upon deployment, also adds a default Integration Runtime, although no nodes are initially added there.

Certain setups, such as those that block firewall traversal, would prevent activities from running in Data Factory's default AutoResolveIntegrationRuntime. In such situations, custom or on-premises nodes need to be added, either to Sidra's default Integration Runtime or to any additional one.

Adding Integration Runtime nodes¶

An Integration Runtime is, basically, a set of nodes for activity execution. To understand how it works, we only need to ask: "How does Data Factory push activities to the Integration Runtime nodes?"

First, an agent is installed on the machine that will become a node. Then, we "tell" that agent "how" to connect to the Data Factory to receive its workload, that is, the activities to execute. Basically, we configure the agent with an endpoint and a security key: through that endpoint, the agent connects to the Data Factory to receive workload. This connectivity information is found in the Integration Runtime's key(s).

Change the Integration Runtime

Once a node has been added to an Integration Runtime, the agent's UI on the node does not allow reassigning the node to a different Integration Runtime.

However, the agent does come with a PowerShell script that allows re-registering the node:

C:\Program Files\Microsoft Integration Runtime\5.0\PowerShellScript\RegisterIntegrationRuntime.ps1

Details about creating an Integration Runtime and adding nodes to it can be found in the product documentation.

Step-by-step

Integration Runtimes using OpenJDK

More information about integration runtimes and the OpenJDK implementation can be found in this tutorial.

5. Dataset¶

To reference data inside a system (for example, a file on an SFTP server or a table in a SQL Server database), there are objects called Datasets. Activities can reference zero or more datasets, depending on the type of activity.
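The relationship between datasets, linked services and integration runtimes can be sketched as follows. The definitions are simplified Python dictionaries modelled on the general shape of ADF JSON; all names (`ExampleSqlServer`, `ExampleSelfHostedIR`, `ExampleCustomerTable`, `dbo.Customer`) are placeholders, and the `unresolved_references` helper is a hypothetical consistency check, not an ADF or Sidra function.

```python
# Hypothetical linked service: where the data lives and through which
# integration runtime it is reached.
linked_service = {
    "name": "ExampleSqlServer",
    "properties": {
        "type": "SqlServer",
        "typeProperties": {"connectionString": "<connection string placeholder>"},
        "connectVia": {
            "referenceName": "ExampleSelfHostedIR",
            "type": "IntegrationRuntimeReference",
        },
    },
}

# Hypothetical dataset: which data inside that system is referenced.
dataset = {
    "name": "ExampleCustomerTable",
    "properties": {
        "type": "SqlServerTable",
        "linkedServiceName": {
            "referenceName": "ExampleSqlServer",
            "type": "LinkedServiceReference",
        },
        "typeProperties": {"tableName": "dbo.Customer"},
    },
}


def unresolved_references(datasets: list, linked_services: list) -> list:
    """Return the names of datasets that point to an unknown linked service."""
    known = {ls["name"] for ls in linked_services}
    return [
        ds["name"]
        for ds in datasets
        if ds["properties"]["linkedServiceName"]["referenceName"] not in known
    ]


missing = unresolved_references([dataset], [linked_service])
print("Unresolved datasets:", missing)
```

The dataset only names the table; the connection details stay in the linked service, so several datasets can share one connection.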

Data Factory Manager¶

The management of all the ADF components is automated by a key architectural piece in Sidra, the Data Factory Manager. This accelerator enables programmatic management of ADF components and greatly streamlines any changes required by new data sources or by changes in existing ones.

In order to achieve that, it uses the information stored in the Sidra Service database about the ADF components that are predefined in the Sidra platform. Those "predefined" components are templates that contain placeholders. When the placeholders are resolved with actual values, the resulting component can be used in ADF.
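The placeholder mechanism can be illustrated with a short Python sketch. The `<<Name>>` placeholder syntax, the template contents and the `resolve_template` function are assumptions made for this example; the actual template format used by the Data Factory Manager is not described here.

```python
import json
import re

# Assumed placeholder syntax for this sketch: <<PlaceholderName>>
PLACEHOLDER = re.compile(r"<<(\w+)>>")

# Hypothetical template of an ADF trigger with two placeholders.
TEMPLATE = """
{
  "name": "<<TriggerName>>",
  "properties": {
    "type": "BlobEventsTrigger",
    "typeProperties": {
      "blobPathBeginsWith": "<<PathPrefix>>",
      "events": ["Microsoft.Storage.BlobCreated"]
    }
  }
}
"""


def resolve_template(template: str, values: dict) -> dict:
    """Replace every placeholder with its value and parse the result as JSON."""
    missing = sorted(set(PLACEHOLDER.findall(template)) - set(values))
    if missing:
        raise KeyError(f"Unresolved placeholders: {missing}")

    def substitute(match: re.Match) -> str:
        # json.dumps escapes quotes and backslashes; [1:-1] drops the outer
        # quotes because the placeholder already sits inside a JSON string.
        return json.dumps(str(values[match.group(1)]))[1:-1]

    return json.loads(PLACEHOLDER.sub(substitute, template))


component = resolve_template(
    TEMPLATE,
    {"TriggerName": "Core Storage Blob Created", "PathPrefix": "/landing/blobs/"},
)
print(json.dumps(component, indent=2))
```

Failing early on unresolved placeholders is a deliberate choice in this sketch: a half-resolved template would otherwise produce a component that looks valid but cannot be used in ADF.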

Sidra platform provides a set of predefined ADF components that are used to create the workflows to ingest the data from the sources into the platform and also to move data from Sidra Service into the Data Products.

Computation with Databricks¶

Azure Databricks is an Apache Spark-based analytics platform optimized for Azure. It is compatible with other Azure services such as SQL Data Warehouse, Power BI, Microsoft Entra ID and Azure Storage.

Like any other Azure resource, it can be created from the Azure Portal or through Azure Resource Manager (ARM) by means of ARM templates.

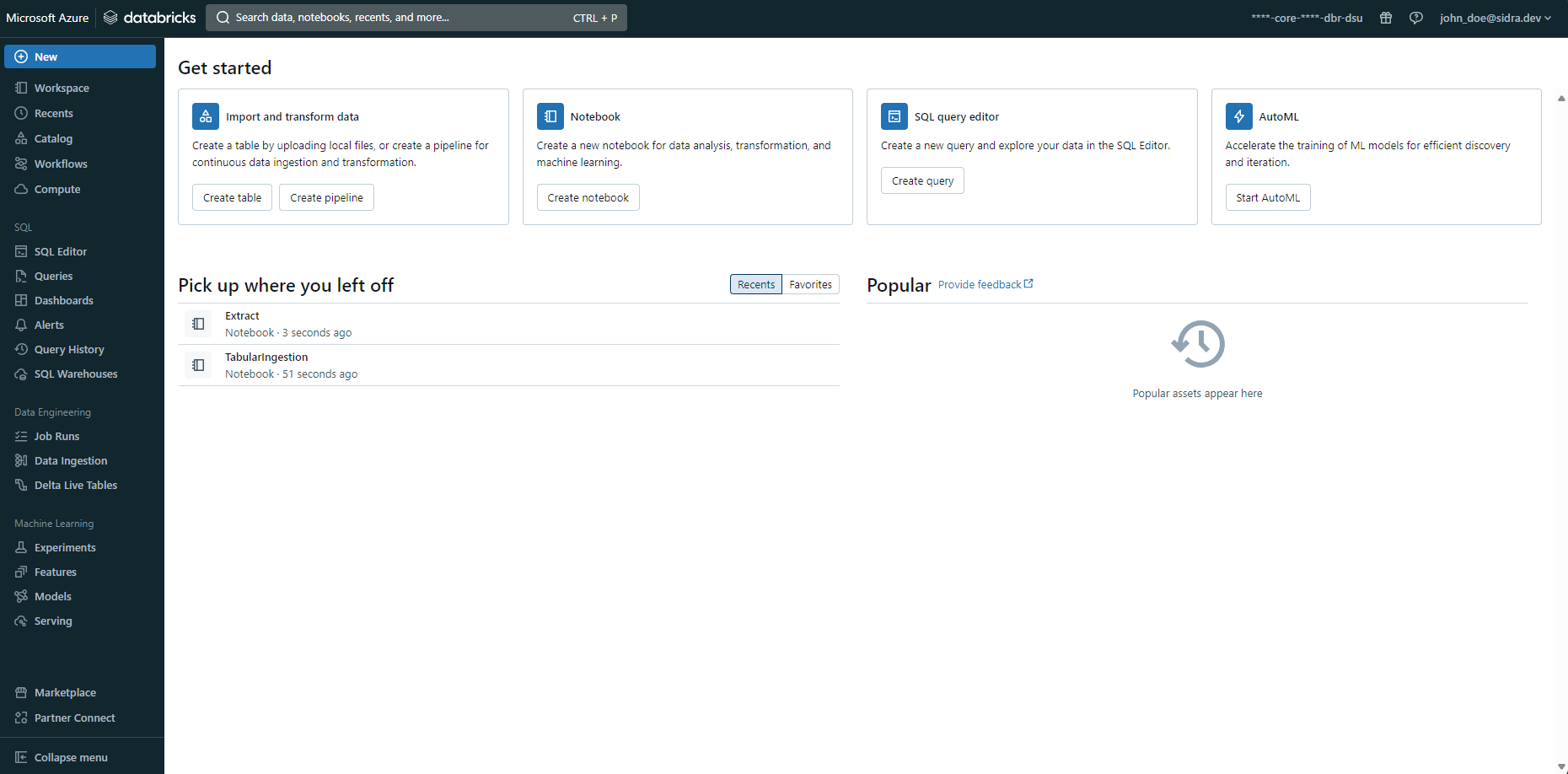

Workspace¶

A workspace is an environment for accessing all the user's Azure Databricks objects. The workspace organizes these objects into folders. Additionally, there are two special folders:

- Shared is for sharing objects across the organization

- Users contains a folder for each registered user

The Azure Databricks UI (a web portal) can be launched from the Azure Portal with the user workspace selected. Thanks to the integration with Microsoft Entra ID, the same user account from the Azure Portal is used in the Azure Databricks UI.

The objects that can be contained in a folder are Dashboards, Libraries, Experiments, Notebooks and the Databricks File System (DBFS), the last two being the most relevant for Sidra:

- A Notebook is a web-based interface to documents that contain runnable commands, visualizations, and narrative text. When Sidra wants to run a script in Databricks, it uses a Notebook that references the script.

- Databricks File System (DBFS) is the filesystem abstraction layer over a blob store. Sidra platform uses DBFS to store the script that will be executed by a Notebook.

Data Management¶

The objects that hold the data on which analytics is performed are Database, Table, Partition and Metastore. Sidra creates the appropriate database, tables and partitions based on the metadata stored in Sidra Service about that data, using two scripts:

- The table creation notebook creates the database, tables and partitions.

- The DSU Ingestion script inserts the data into the objects created by CreateTable.py.
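As an illustration of how table structures can be derived from metadata, the following Python sketch builds Spark SQL statements from a small metadata dictionary. This is not Sidra's table creation script: the metadata shape, the `build_ddl` function and the choice of a Delta table are assumptions made for the example.

```python
# Hypothetical metadata describing one table; the real metadata model
# stored in Sidra Service is not shown in this document.
metadata = {
    "database": "sales",
    "table": "orders",
    "columns": [
        {"name": "order_id", "type": "BIGINT"},
        {"name": "customer", "type": "STRING"},
        {"name": "order_date", "type": "DATE"},
    ],
    "partition_by": ["order_date"],
}


def build_ddl(meta: dict) -> list:
    """Build the Spark SQL statements that create the database and the table."""
    database, table = meta["database"], meta["table"]
    columns = ", ".join(f"`{c['name']}` {c['type']}" for c in meta["columns"])
    create_table = (
        f"CREATE TABLE IF NOT EXISTS `{database}`.`{table}` ({columns}) USING DELTA"
    )
    if meta.get("partition_by"):
        partitions = ", ".join(f"`{p}`" for p in meta["partition_by"])
        create_table += f" PARTITIONED BY ({partitions})"
    return [f"CREATE DATABASE IF NOT EXISTS `{database}`", create_table]


statements = build_ddl(metadata)
for statement in statements:
    # Inside a Databricks notebook, each statement could be run with spark.sql(statement).
    print(statement)
```

Using `IF NOT EXISTS` keeps the statements safe to run repeatedly, which suits a script that is executed each time the metadata is processed.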

Computation Management¶

Clusters and Jobs are the concepts related to running analytic computations in Azure Databricks.

- A Cluster is a set of computation resources and configurations on which you run notebooks and jobs. The cluster is automatically started when a job is executed and can be configured to terminate after a period of inactivity, which helps reduce the cost of the resource.

- A Job is a non-interactive mechanism for running a notebook. Since a notebook is a web-based UI, Sidra uses jobs to run notebooks without user interaction.
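The two concepts can be sketched as the kind of JSON payloads that the Databricks REST API accepts. The example below only builds the payloads in Python and does not call any API. The field names follow the Databricks Clusters and Jobs APIs as generally documented and should be checked against the official reference; the notebook path, parameter names and bracketed values are placeholders, and `build_notebook_job` is a hypothetical helper.

```python
import json

# Cluster specification with auto-termination after 30 idle minutes.
# Bracketed values are placeholders to be replaced with real ones.
cluster_spec = {
    "cluster_name": "example-cluster",
    "spark_version": "<databricks runtime version>",
    "node_type_id": "<azure vm size>",
    "num_workers": 2,
    "autotermination_minutes": 30,
}


def build_notebook_job(name: str, notebook_path: str, cluster_id: str, parameters: dict) -> dict:
    """Hypothetical helper: job definition that runs one notebook on an existing cluster."""
    return {
        "name": name,
        "tasks": [
            {
                "task_key": "run_notebook",
                "existing_cluster_id": cluster_id,
                "notebook_task": {
                    "notebook_path": notebook_path,
                    # Parameters are passed to the notebook as strings.
                    "base_parameters": {k: str(v) for k, v in parameters.items()},
                },
            }
        ],
    }


job = build_notebook_job(
    name="example-ingestion-job",
    notebook_path="/Shared/example-notebook",
    cluster_id="<cluster id>",
    parameters={"asset_id": 42},
)
print(json.dumps(job, indent=2))
```

Separating the cluster specification from the job definition mirrors the description above: the cluster controls cost through auto-termination, while the job only states which notebook to run and with which parameters.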